Code Scoring

Overview

After analyzing your codebase and extracting code snippets, you can use Artemis's scoring functionality to evaluate the quality of these snippets based on various criteria. This helps you identify which parts of your code would benefit most from optimization or improvement.

The scoring system provides both pre-defined metrics and the ability to create custom scoring criteria, giving you flexibility in how you evaluate your code. You can score snippets individually or evaluate multiple snippets at once.

Score Code Snippets

Once code snippets are retrieved, you can use Artemis scorers to evaluate the quality of snippets based on pre-defined or custom criteria.

The retrieved code snippets can be scored one by one or all at once.

Across the platform, the "balance" icon represents the scoring options.

To score a selected code snippet, click the balance icon next to that snippet. To score all of the code snippets that you have retrieved, click the balance icon at the top next to the Scores column title.

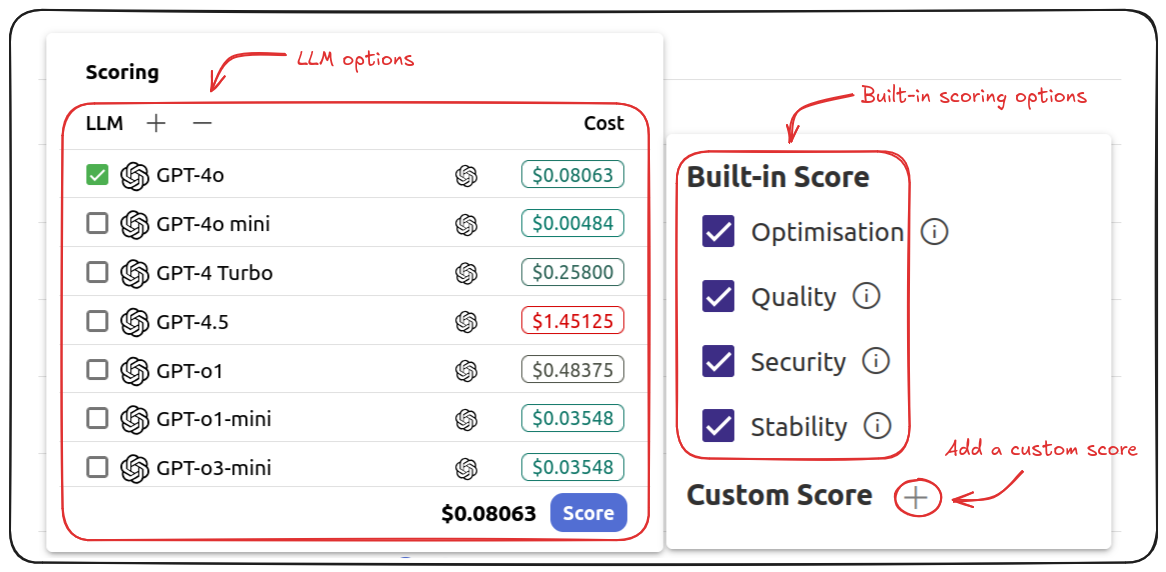

Clicking the balance icon opens the Scoring panel, which lists the available LLMs and scoring criteria.

Use the checkboxes under Scoring to select which LLMs you would like to use to generate the scores.

Some LLMs incur a cost. Check the Estimated Cost item at the bottom of the Scoring panel so you know how much the scoring task will cost before you run it.

Built-in and Custom Scores

Use the checkboxes under Built-in Score to select the criteria along which you would like to score your code.

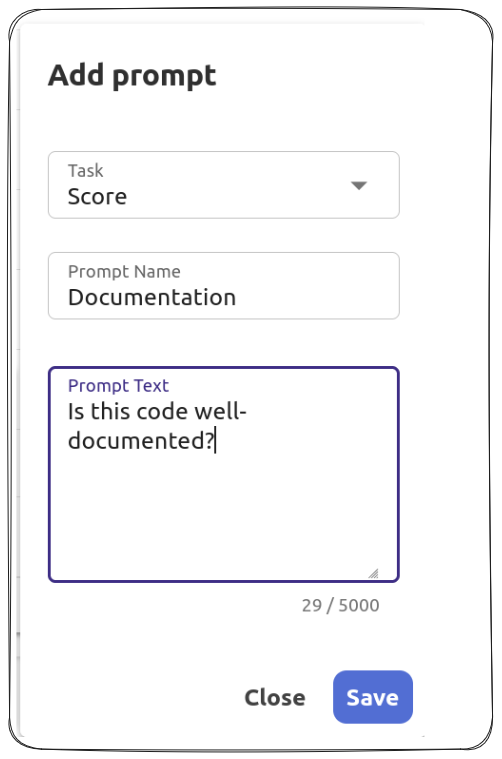

You can also define custom scoring metrics by clicking the Add button next to Custom Score.

To set up a custom score, give it a name (for easy identification) and describe the criteria you want to measure as a natural-language prompt.
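To make the two fields concrete, here is a hypothetical custom score written as a small Python structure. `CustomScore` and its fields are illustrative only and are not an Artemis API; in practice you type the name and prompt into the Scoring panel. The prompt text is just one example of a focused, specific criterion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomScore:
    """Hypothetical model of a custom score; not part of any Artemis API."""

    name: str    # short label used to identify the score in the results
    prompt: str  # natural-language description of the criterion to measure


# Example: a readability criterion phrased as a focused, specific prompt.
readability = CustomScore(
    name="Readability",
    prompt=(
        "Rate how easy this code is for a new team member to understand. "
        "Consider naming, function length, nesting depth, and comments."
    ),
)

print(f"{readability.name}: {readability.prompt}")
```

A narrow prompt that names the aspects to consider tends to give more consistent scores than a broad one such as "Is this code good?".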

Once you have written your prompts and selected the models, click Score at the bottom of the Scoring panel to start scoring.

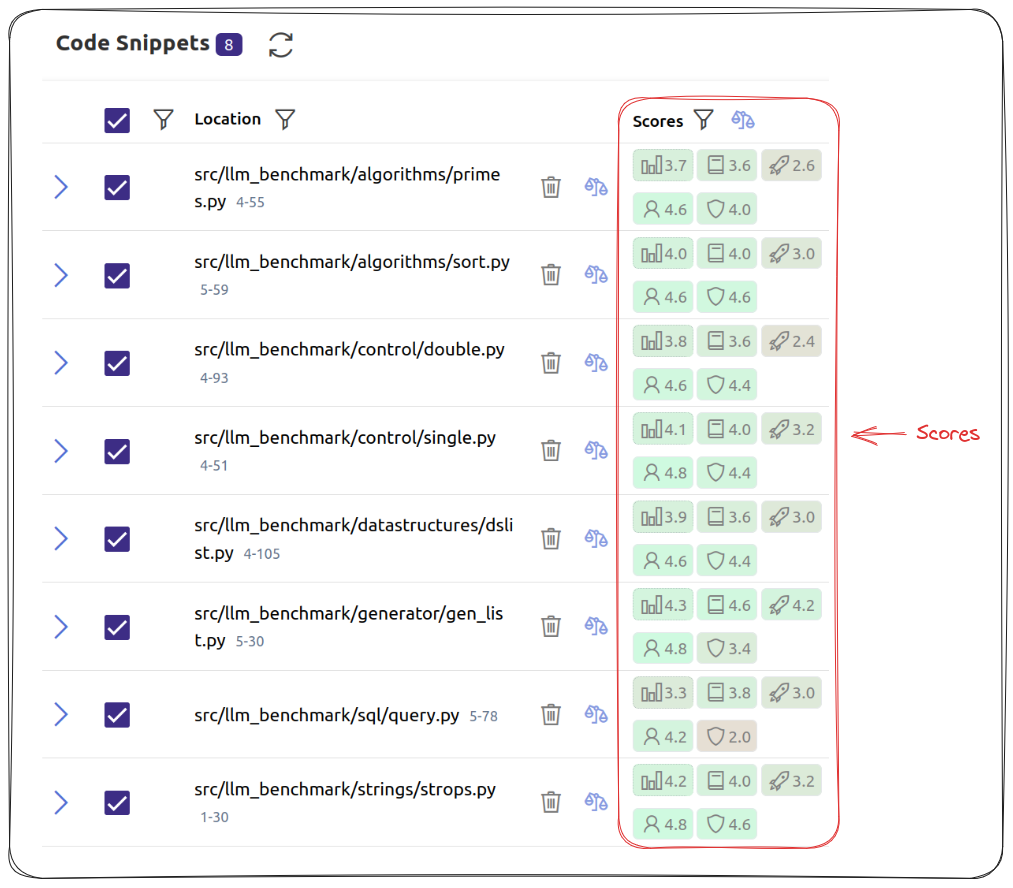

When the scoring task is complete, your scores appear under the Scores tab.

If you define a custom prompt, the resulting score is marked with a "human figure outline" icon across your scoring tasks.

Understanding the Scores

Artemis provides several default scores to help you evaluate different aspects of your code. Here's how to interpret them:

| Score | Explanation |
|---|---|
| Optimisation | Evaluates how optimised a piece of code is. |
| Quality | Evaluates how good a piece of code is, where "good" is used broadly to uncover inefficiencies in the code. |
| Security | Evaluates how secure a piece of code is. |
| Stability | Evaluates code snippets for bugs and other points of failure. |
| Average Score | The average of the above scores for a given piece of code. If you selected multiple LLMs, the average also spans the scores each LLM provided for each of the criteria above. |
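As a worked illustration of the Average Score, the sketch below averages one snippet's built-in scores across two LLMs. It assumes an unweighted mean over every (LLM, criterion) pair and a 0-10 scale; the LLM names and values are hypothetical, and the actual scale and weighting Artemis uses may differ.

```python
from statistics import mean

# Hypothetical scores for one snippet: scores[llm][criterion], assumed 0-10 scale.
scores = {
    "llm_a": {"Optimisation": 6, "Quality": 7, "Security": 8, "Stability": 5},
    "llm_b": {"Optimisation": 4, "Quality": 7, "Security": 6, "Stability": 7},
}


def average_score(scores: dict[str, dict[str, float]]) -> float:
    """Unweighted mean over every (LLM, criterion) score for one snippet."""
    return mean(value for per_llm in scores.values() for value in per_llm.values())


print(average_score(scores))  # 6.25
```

When every LLM scores every criterion, this equals averaging each LLM's own mean: (6.5 + 6.0) / 2 = 6.25.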

Click a score's tile to see an explanation of why that score was given to the snippet.

Low scores indicate that your code might need to be reworked. Once you've identified code snippets that need improvement, you can proceed to Generate code versions to get suggestions for how to enhance them.
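If you want to prioritise rework across many snippets, one simple approach is to flag every snippet whose Average Score falls below a cutoff and list the lowest first. The sketch below assumes you have copied each snippet's average into a dictionary; the snippet names, scores, and the 5.0 threshold are hypothetical and chosen only for illustration.

```python
# Hypothetical average scores per snippet, e.g. copied from the Scores column.
snippet_scores = {
    "parse_config": 7.5,
    "load_users": 3.0,
    "render_report": 5.5,
    "hash_password": 4.0,
}


def needs_rework(scores: dict[str, float], threshold: float) -> list[str]:
    """Return names of snippets scoring below threshold, lowest score first."""
    low = [name for name, score in scores.items() if score < threshold]
    return sorted(low, key=scores.__getitem__)


print(needs_rework(snippet_scores, threshold=5.0))  # ['load_users', 'hash_password']
```

Starting with the lowest-scoring snippets focuses code generation where it is most likely to help.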