Build settings

Build settings overview

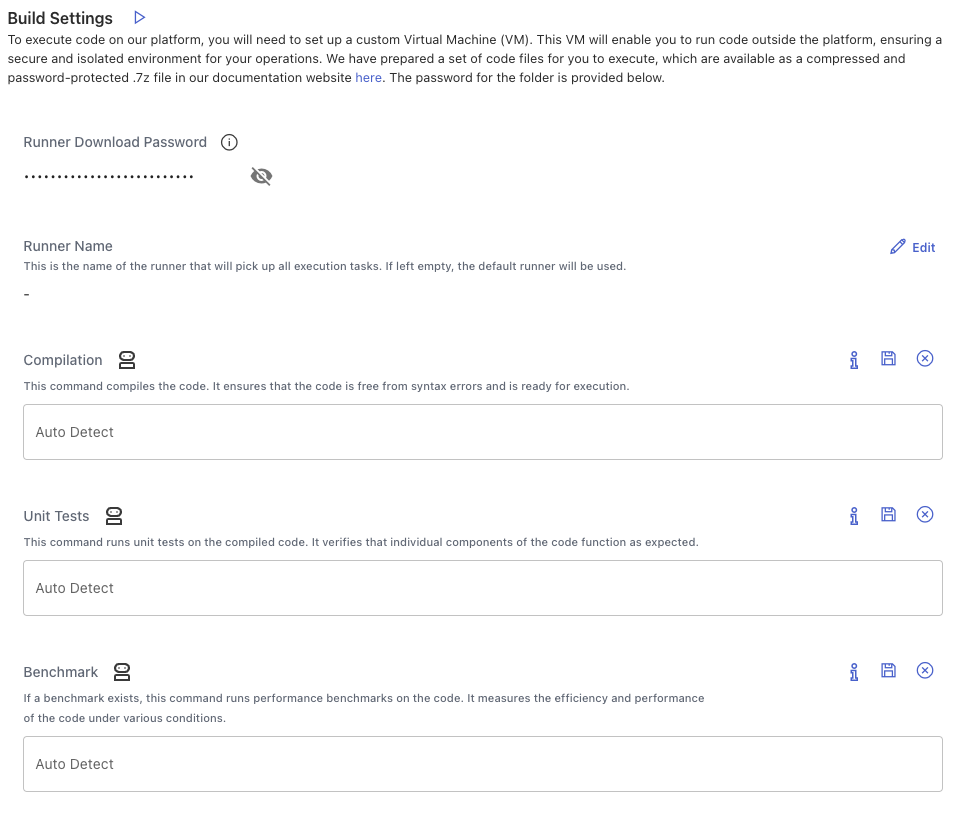

From the build settings page in the Artemis web console you can configure commands to run builds, tests, and benchmarks on a runner.

If you do not already have an Artemis custom runner configured, consider setting one up now, before proceeding, by following the Custom Runner setup guide.

Populating build settings

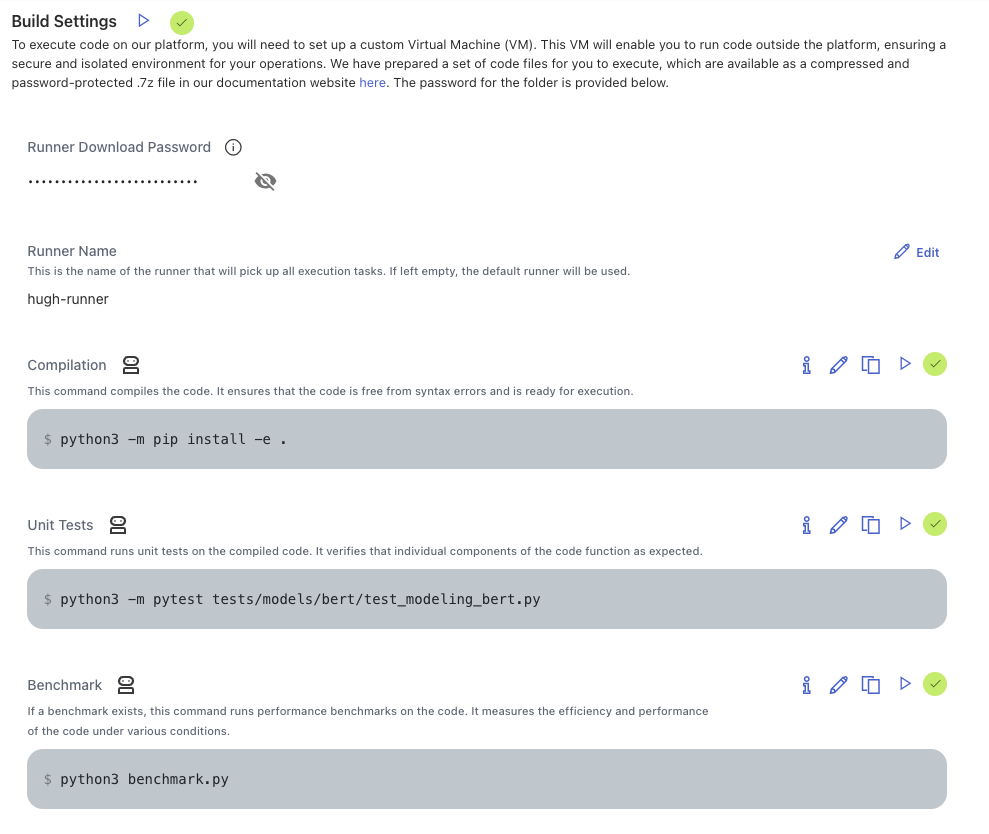

The build settings page has five sections. The first is the name of the runner your commands will execute on (for instructions on configuring a custom runner, see the Custom Runner documentation). Each of the remaining four sections corresponds to a different kind of validation command. Before populating these fields, check that each command works when run in the same environment as your custom runner, then fill in the build settings page as follows:

Compilation

This command is run before all other commands and is used to build your project. Common examples include:

- Python: `python -m pip install -e .`
- Java: `mvn install -DskipTests` (tests are left to the Unit Tests command)
- C: `make build`

Unit Tests

This command checks that new versions of snippets generated by Artemis do not break existing functionality in your project. Common examples include:

- Unit Tests

- Integration Tests
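If you have more than one suite, for example unit tests and integration tests, you can combine them into the single Unit Tests command. The sketch below is a hypothetical shell helper, not part of Artemis. It runs every suite even when an earlier one fails, so the logs show all failures, and still returns a non-zero status if any suite failed. It assumes Artemis treats a non-zero exit status as a failed command, which this page does not state explicitly.

```shell
#!/usr/bin/env sh
# Hypothetical helper, not part of Artemis: run every test suite command,
# keep going after a failure, and return 1 if any suite failed.
run_all_suites() {
  local failed=0
  local suite
  for suite in "$@"; do
    echo "==> $suite"
    # Each argument is a complete shell command string.
    if ! sh -c "$suite"; then
      echo "FAILED: $suite"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, you could append `run_all_suites "python -m pytest tests/unit" "python -m pytest tests/integration"` to a script in your repository and set the Unit Tests command to run that script; the script location and both test paths are placeholders. Chaining the suites with `&&` instead would stop at the first failing suite.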

Benchmark

This command is used to measure the performance of versions of snippets generated by Artemis against the original version of the code. Common examples include:

- Performance benchmarks

- Load tests

- Sample use cases
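This page does not specify how Artemis reads benchmark results, so the following is only an illustration of the kind of repeatable measurement a benchmark command performs. It is a hypothetical shell function, not an Artemis API, that runs a command a fixed number of times and prints the mean wall-clock time in milliseconds. It assumes GNU `date`, whose `%N` format prints nanoseconds; macOS/BSD `date` does not support `%N`.

```shell
#!/usr/bin/env sh
# Hypothetical benchmark helper, not part of Artemis: run a command RUNS
# times and print the mean wall-clock time in whole milliseconds.
# Requires GNU date for nanosecond timestamps (%N).
bench_mean_ms() {
  local runs="$1"
  local cmd="$2"
  # Reject empty, non-numeric, zero, or negative run counts.
  if ! [ "$runs" -gt 0 ] 2>/dev/null; then
    echo "runs must be a positive integer" >&2
    return 2
  fi
  local total_ns=0 i=0 start end
  while [ "$i" -lt "$runs" ]; do
    start=$(date +%s%N)
    # Discard the command's stdout so only the timing is printed;
    # stop at the first failing run and propagate its exit status.
    sh -c "$cmd" >/dev/null || return $?
    end=$(date +%s%N)
    total_ns=$((total_ns + end - start))
    i=$((i + 1))
  done
  # After the loop, i equals the number of completed runs.
  echo $((total_ns / i / 1000000))
}
```

For example, `bench_mean_ms 5 "python benchmarks/run.py"` would time five runs of a hypothetical benchmark script. Each measurement includes the cost of starting a new `sh` process, so compare versions measured the same way rather than reading the numbers as absolute.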

Cleanup

Finally, the optional cleanup command removes any artifacts that the previous commands left behind on the runner and that might interfere with subsequent runs of the validation steps. Common examples include:

- `rm -rf build`
- `mvn clean`
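To follow the advice to check your commands in the same environment as your custom runner, you can run them by hand on the runner machine before entering them on the build settings page. The sketch below is a hypothetical pre-flight script, not part of Artemis. It runs compilation, unit tests, and benchmark in order, stops that chain at the first failure, and always attempts cleanup afterwards. That ordering is an assumption based on the descriptions above, not a documented account of how Artemis schedules the commands. The default commands are placeholders, and `benchmarks/run.py` is a hypothetical path.

```shell
#!/usr/bin/env sh
# Hypothetical pre-flight check, not part of Artemis: run the build settings
# commands in order and report each one. Override any command through the
# environment; set a command to "" to skip it (e.g. the optional cleanup).
COMPILE_CMD="${COMPILE_CMD-python -m pip install -e .}"
TEST_CMD="${TEST_CMD-python -m pytest}"
BENCH_CMD="${BENCH_CMD-python benchmarks/run.py}"   # hypothetical path
CLEANUP_CMD="${CLEANUP_CMD-rm -rf build}"

# Run one command string, print PASS/FAIL/SKIP, and return its exit status.
run_step() {
  local name="$1"
  local cmd="$2"
  local status
  if [ -z "$cmd" ]; then
    echo "SKIP $name"
    return 0
  fi
  sh -c "$cmd" && status=0 || status=$?
  if [ "$status" -eq 0 ]; then
    echo "PASS $name"
  else
    echo "FAIL $name (exit $status)"
  fi
  return "$status"
}

check_build_settings() {
  local overall=0
  # Stop the compile/test/benchmark chain at the first failing step...
  run_step compilation "$COMPILE_CMD" \
    && run_step unit-tests "$TEST_CMD" \
    && run_step benchmark "$BENCH_CMD" \
    || overall=$?
  # ...but always attempt cleanup so the next run starts from a clean state.
  run_step cleanup "$CLEANUP_CMD" || [ "$overall" -ne 0 ] || overall=1
  return "$overall"
}
```

To use it, save the script (for example as `check_build_settings.sh`), then run `. ./check_build_settings.sh && check_build_settings` from your project directory on the runner machine. If every line reports PASS or SKIP, the same commands are good candidates for the build settings page.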

Generate missing unit tests or benchmarks with Artemis

If your project does not already have unit tests and benchmarks, you can use Artemis to generate them: index your project, then use Artemis chat to ask Artemis to suggest potential benchmarks and tests. Once you have your new benchmarks and tests, you can recreate your project from a branch of your original project that includes them.

For a walkthrough of creating and running benchmarks and unit tests with Artemis, watch the Artemis AI video tutorial: Generate Benchmarks and Unit Tests with Artemis

Testing build settings

Test your newly configured build settings by clicking the Validate Snippets button to the right of the build settings page title.

You should see a green tick appear next to each command after the command is executed successfully on the runner.

Troubleshooting build settings

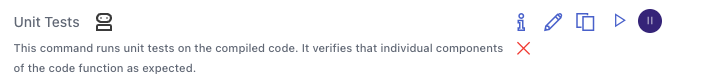

If a command fails, click the X in the red circle to view the logs it generated.

Common build settings issues

Commands stuck on pending forever

If a command is stuck on the purple pending symbol, Artemis is most likely not picking up your custom runner correctly. Check that the runner name on the build settings page matches the name of your custom runner as shown in the custom runner logs.

If you do not have a custom runner configured, your commands may be waiting for a runner to become available. Configuring your own custom runner avoids waiting for a runner to be free.

Next steps

Now that you have configured commands to validate generated code, try generating optimized code versions with Artemis. For instructions, see the Code Optimization documentation.