Preliminary code audit

You can use Artemis to retrieve the code snippets in your codebase that have the most potential for improvement, and to score those snippets so that you can prioritise which ones need attention first. Both functionalities are described below.

Select files for analysis

To start the analysis, click the Analyse button in the right corner of your codebase entry in Projects. See image below:

Clicking the Analyse button will take you to a page for filtering code files and content.

This is done in two steps:

Step 1: Filtering by files

Step 2: Filtering by content

See details of each step below:

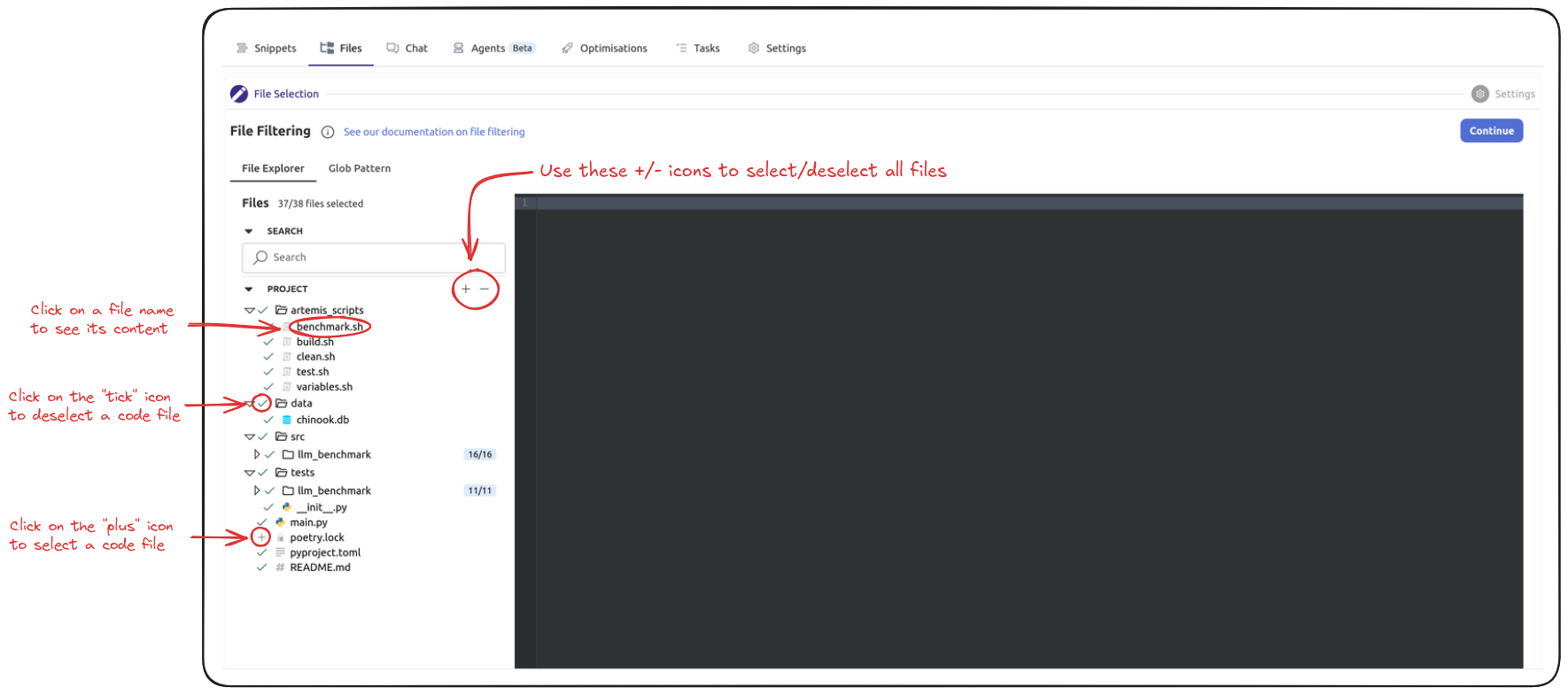

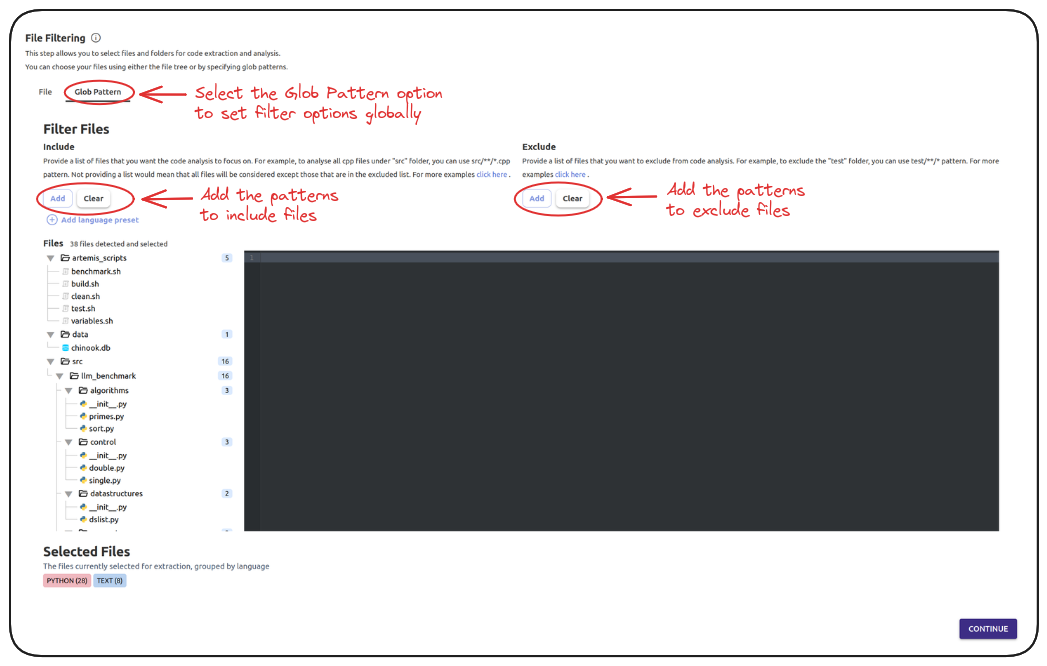

File Filtering: Select the code files you would like to analyse

Under File Filtering, you can choose code files to include or exclude. This can be done either by clicking on the file titles, or with glob patterns.

Option 1: The image below gives the options for viewing and filtering files manually.

Option 2: Alternatively, you can select or exclude a set of code files by their file title, using Glob patterns. See image below for details:

| Language | File Extensions |
|---|---|
| C++ | .cpp, .c, .h, .hpp, .cc, .hh, .cxx, .hxx, .c++, .h++, .cu, .cuh |
| C | .c, .h |
| Java | .java |
| Python | .py |
| Fortran | .f, .for, .f90, .f95, .f03, .f08, .F, .F90 |
| JavaScript | .js, .jsx |
| TypeScript | .ts, .tsx |
| Ruby | .rb |
| PHP | .php |
| C# | .cs |
| Go | .go |
| Swift | .swift |
| Kotlin | .kt, .kts |
| Scala | .scala |
| Rust | .rs |
| Dart | .dart |
| R | .r, .R |
| Lua | .lua |
| Perl | .pl, .pm |
| SQL | .sql |
| Q | .q |
| COBOL | .cob, .cbl, .cpy |
| OCaml | .ml |
| Elixir | .ex |
| Text | .txt, .md, .html, .css, .scss, .vue, .xml, .json, .yaml, .yml, .ini, .log, .conf, .cfg, .tsv, .rst, .tex, .bat, .sh, .pl, .toml, .properties, .gradle, .maven, .cmd, .awk, .env, .helm, .tpl, .kubeconfig, .npmrc, .prettierrc, .eslintrc, .babelrc, .terraformrc, .tfvars, .tf, .editorconfig, .gitignore, .gitconfig, .zshrc, .bashrc, .profile, .flake8, .pylintrc, .coveragerc, .drl, .m, .jl, .vba, .bas, .cls, .frm |
| Unsupported | .csv |

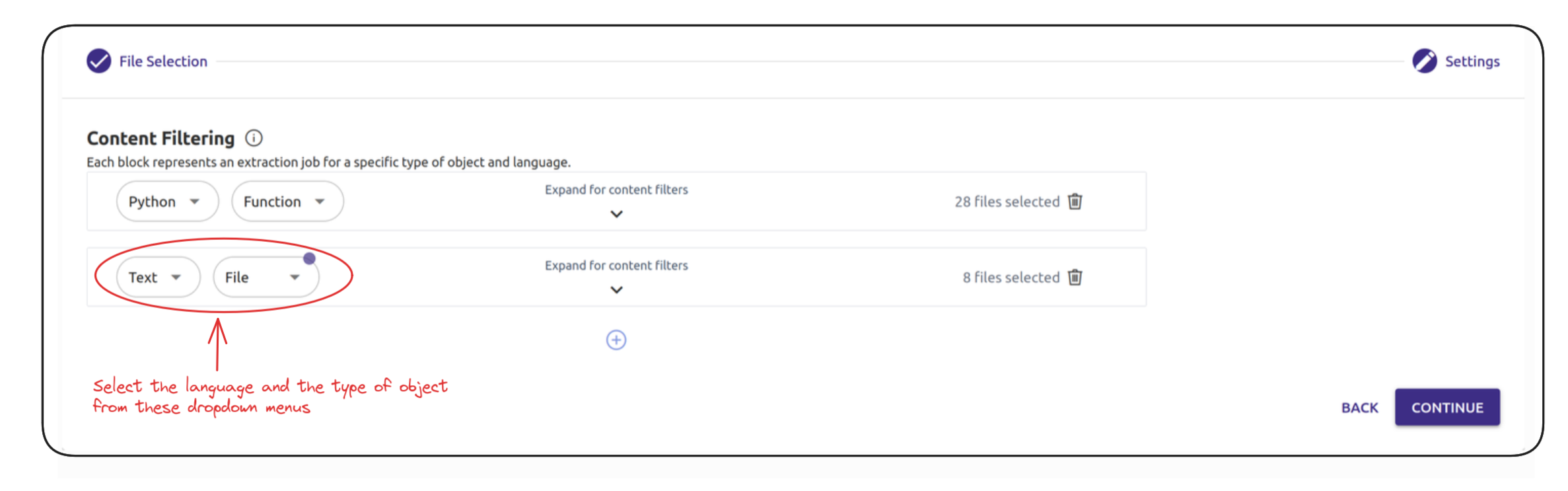
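The include and exclude behaviour of glob patterns described under Option 2 can be sketched in a few lines of Python. This is only an illustration: it uses Python's standard `fnmatch` module, whose `*` also matches `/`, and the glob dialect that Artemis accepts may differ. The file paths and the `select_files` helper are hypothetical.

```python
from fnmatch import fnmatchcase


def select_files(paths, include, exclude=()):
    """Keep paths that match any include pattern and no exclude pattern."""
    selected = []
    for path in paths:
        included = any(fnmatchcase(path, pat) for pat in include)
        excluded = any(fnmatchcase(path, pat) for pat in exclude)
        if included and not excluded:
            selected.append(path)
    return selected


# Hypothetical project layout, for illustration only.
paths = [
    "src/main.cpp",
    "src/util.h",
    "tests/test_main.cpp",
    "README.md",
]

# Include every .cpp file, but leave out anything under tests/.
selected = select_files(paths, include=["*.cpp"], exclude=["tests/*"])
print(selected)
```

Here only `src/main.cpp` is kept: `tests/test_main.cpp` matches the include pattern but is removed again by the exclude pattern.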

Content Filtering: Select parts of code files

Under Content Filtering, you can choose parts of the codebase to analyse, based on programming language. This also includes an option for text. See the image below for an illustration.

Once you select the coding language, you are able to select which parts of the codebase to further extract. The options available are to extract by: (1) Function, (2) Class, or (3) File, where you can choose the entire code file.

For text-based components of the project, you are only able to select the File. Any additional levels of extractions are not available for text files.

Click on the arrow button below Expand for content filters for further content filtering options.

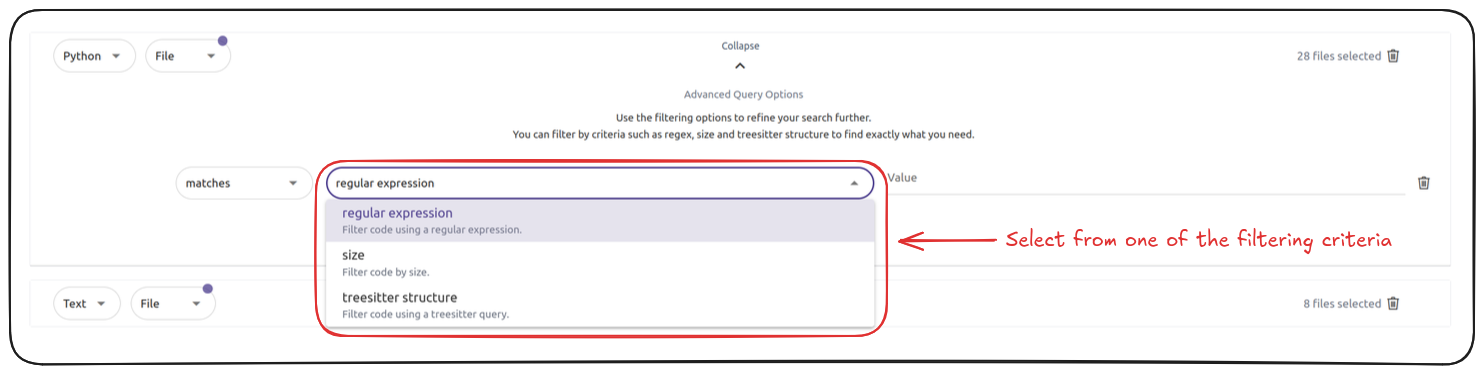

Content Filtering: Further filtering options

Here is an overview of the further content filtering options:

Further content filtering can be done by regular expression, size, or treesitter structure.

Although the Advanced Query Options section only contains the condition, criteria, and value fields, the filter is applied to the code language and object selected in the dropdowns at the top. A complete example query is included for each of the three options below.

See details of each option below:

(1) regular expression: where you can filter for content that either matches or does not match a regular expression of your choosing.

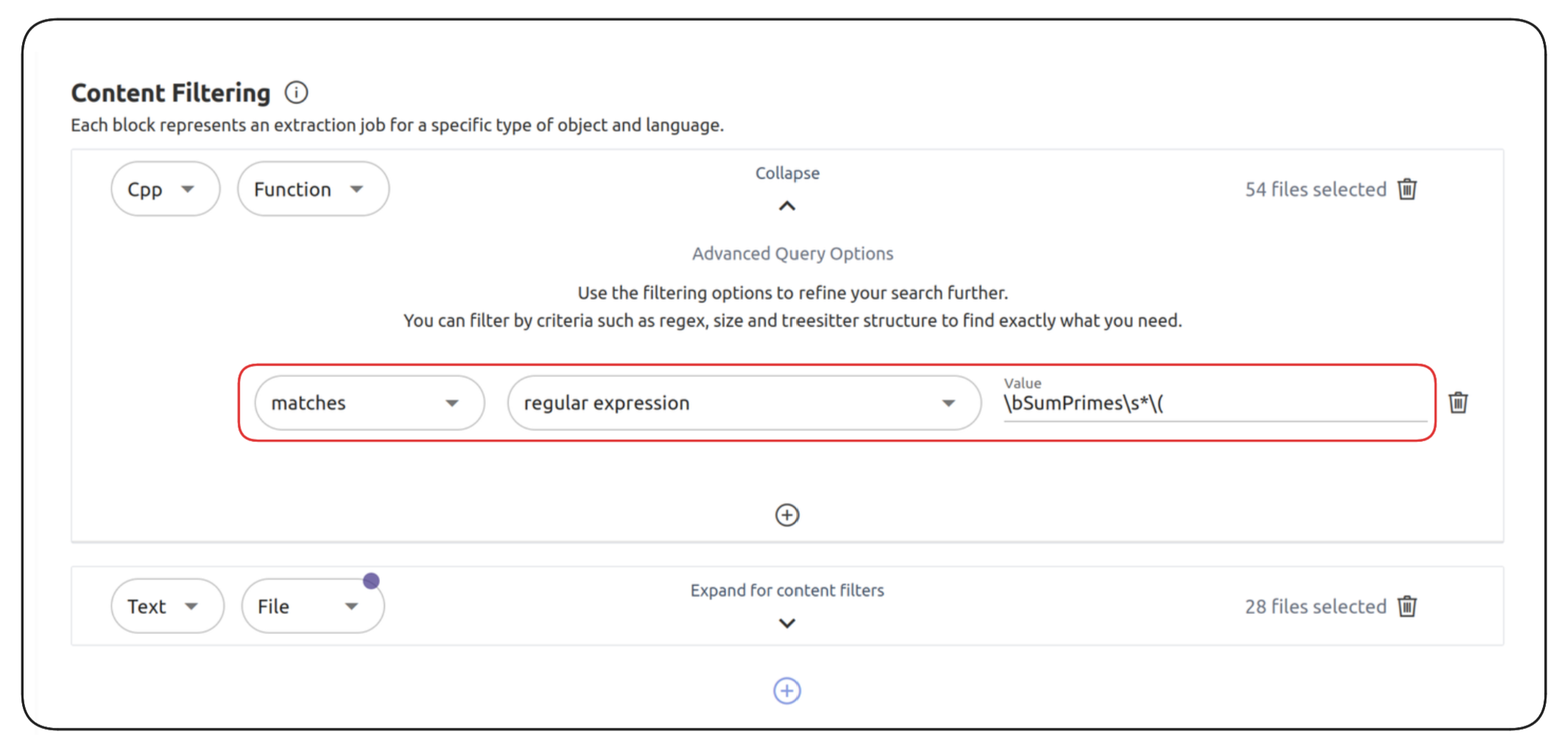

Example: Consider the image below

Here, the regex follows the logic:

Cpp Function matches regular expression \bSumPrimes\s*\(

This means that Artemis will scan the codebase for C++ functions whose code contains SumPrimes followed by an opening parenthesis, i.e. a definition of, or a call to, the function SumPrimes.
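To see what the pattern does and does not select, it can be tried against a few sample lines. The sketch below uses Python's `re` module, which may not be the regex engine Artemis uses, although `\b`, `\s*` and `\(` behave the same way in most engines. The C++ fragments are hypothetical.

```python
import re

# The pattern from the example: the whole word SumPrimes,
# optional whitespace, then an opening parenthesis.
PATTERN = re.compile(r"\bSumPrimes\s*\(")

# Hypothetical C++ fragments, for illustration only.
snippets = {
    "definition": "long SumPrimes(int limit) { return 0; }",
    "call with space": "total = SumPrimes (100);",
    "longer name": "long SumPrimesFast(int limit);",
    "prefixed name": "long MySumPrimes(int limit);",
    "no parenthesis": "// SumPrimes is slow",
}

matches = {label: bool(PATTERN.search(code)) for label, code in snippets.items()}
for label, matched in matches.items():
    print(f"{label}: {'match' if matched else 'no match'}")
```

The word boundary `\b` rejects `MySumPrimes`, and requiring `(` after optional whitespace rejects both `SumPrimesFast` and a bare mention of the name in a comment.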

(2) size: where you can filter content based on the size of the selected object. The options available to filter are characters, words, or lines.

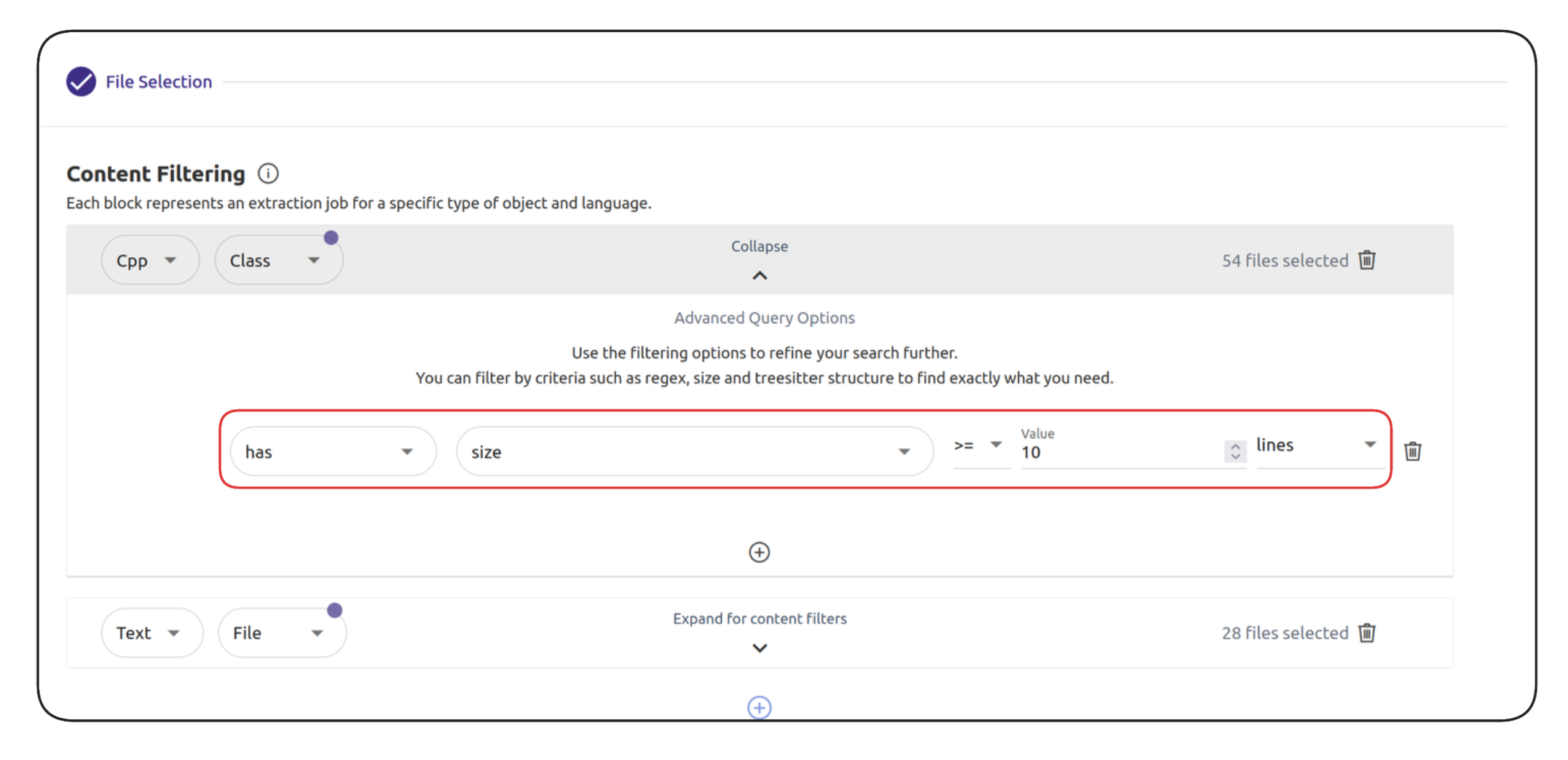

Consider the example below:

Here, the filtering logic is:

Cpp Class has size >= 10 lines
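As a rough sketch of what a size filter measures, the Python below counts characters, words, and lines for a snippet and applies the `>= 10 lines` condition. How Artemis counts (for example, whether blank lines or comments are included) is not stated here, so the counting rules, the `measure` and `passes_size_filter` helpers, and the sample classes are all assumptions.

```python
def measure(snippet):
    """Return the size of a snippet in characters, words, and lines."""
    return {
        "characters": len(snippet),
        "words": len(snippet.split()),
        "lines": len(snippet.splitlines()),
    }


def passes_size_filter(snippet, unit, minimum):
    """True when the snippet has at least `minimum` of the given unit."""
    return measure(snippet)[unit] >= minimum


# Hypothetical C++ classes, for illustration only.
small_class = "class Point {\npublic:\n    int x;\n    int y;\n};\n"
large_class = (
    "class Grid {\n"
    + "".join(f"    int cell{i};\n" for i in range(10))
    + "};\n"
)

for name, code in [("Point", small_class), ("Grid", large_class)]:
    kept = passes_size_filter(code, "lines", 10)
    print(name, measure(code)["lines"], "lines ->", "kept" if kept else "filtered out")
```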

(3) treesitter structure: where you can use a Tree-sitter query to extract loop or nested_loop content from your codebase.

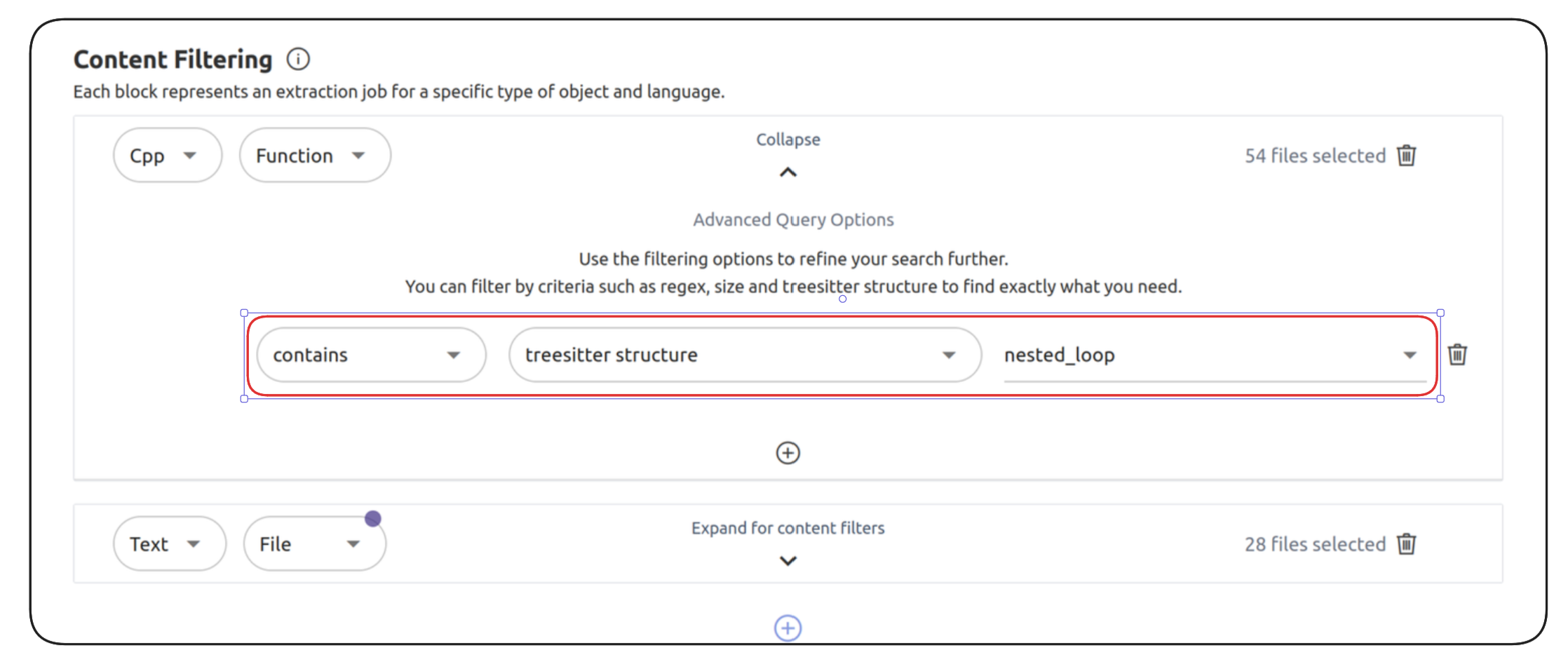

See the image in the example below:

Here, the filtering logic is:

Cpp Function contains treesitter structure nested_loop

For more information, see the Tree-sitter library documentation.
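Structure-based filtering differs from regex filtering in that it inspects the parsed syntax tree rather than the raw text. The sketch below illustrates the idea of a nested_loop filter using Python's built-in `ast` module on Python source. It is an analogy only: it does not use Tree-sitter, it does not parse C++, and it is not how Artemis implements the filter. The `has_nested_loop` helper and the sample functions are hypothetical.

```python
import ast

LOOPS = (ast.For, ast.While)


def has_nested_loop(func):
    """True when some loop inside `func` contains another loop."""
    for node in ast.walk(func):
        if isinstance(node, LOOPS):
            # ast.walk also yields the starting node, so skip it.
            inner = (n for n in ast.walk(node) if n is not node)
            if any(isinstance(n, LOOPS) for n in inner):
                return True
    return False


SOURCE = '''
def pair_sums(values):
    out = []
    for a in values:
        for b in values:
            out.append(a + b)
    return out

def total(values):
    result = 0
    for v in values:
        result += v
    return result
'''

tree = ast.parse(SOURCE)
nested = [
    node.name
    for node in ast.walk(tree)
    if isinstance(node, ast.FunctionDef) and has_nested_loop(node)
]
print(nested)
```

Only `pair_sums` is selected, because its outer `for` loop has a second `for` loop in its body, while `total` contains a single loop.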

Once you have provided your filtering criteria, click Continue to start extracting the snippets.

Content filtering: Select code snippets with an LLM query

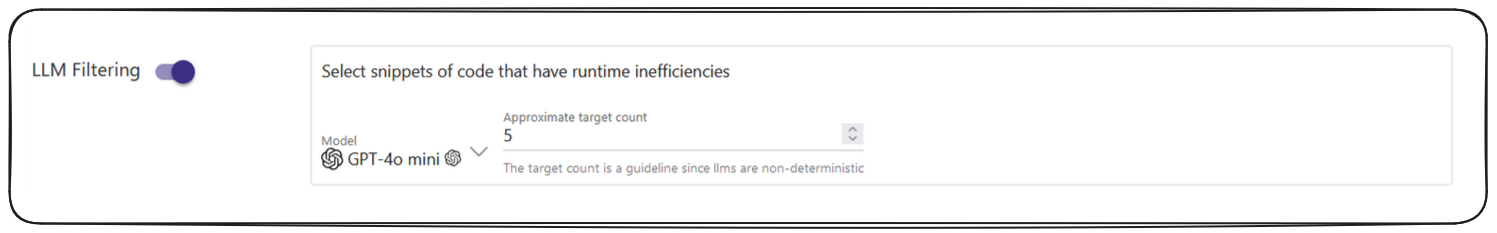

You are also able to use an LLM query to select parts of the codebase that you wish to analyse. To do this, turn on the LLM Filtering toggle. See image below:

Optionally, specify the target count to control the number of snippets that the LLM will pick from your codebase. For instance, in the example above, the target count is 5, which means the LLM will pick the 5 most inefficient snippets of code.

LLM-based filtering may lead to more false positives in code snippet selection, i.e., selection of code snippets that cannot in fact be optimised.

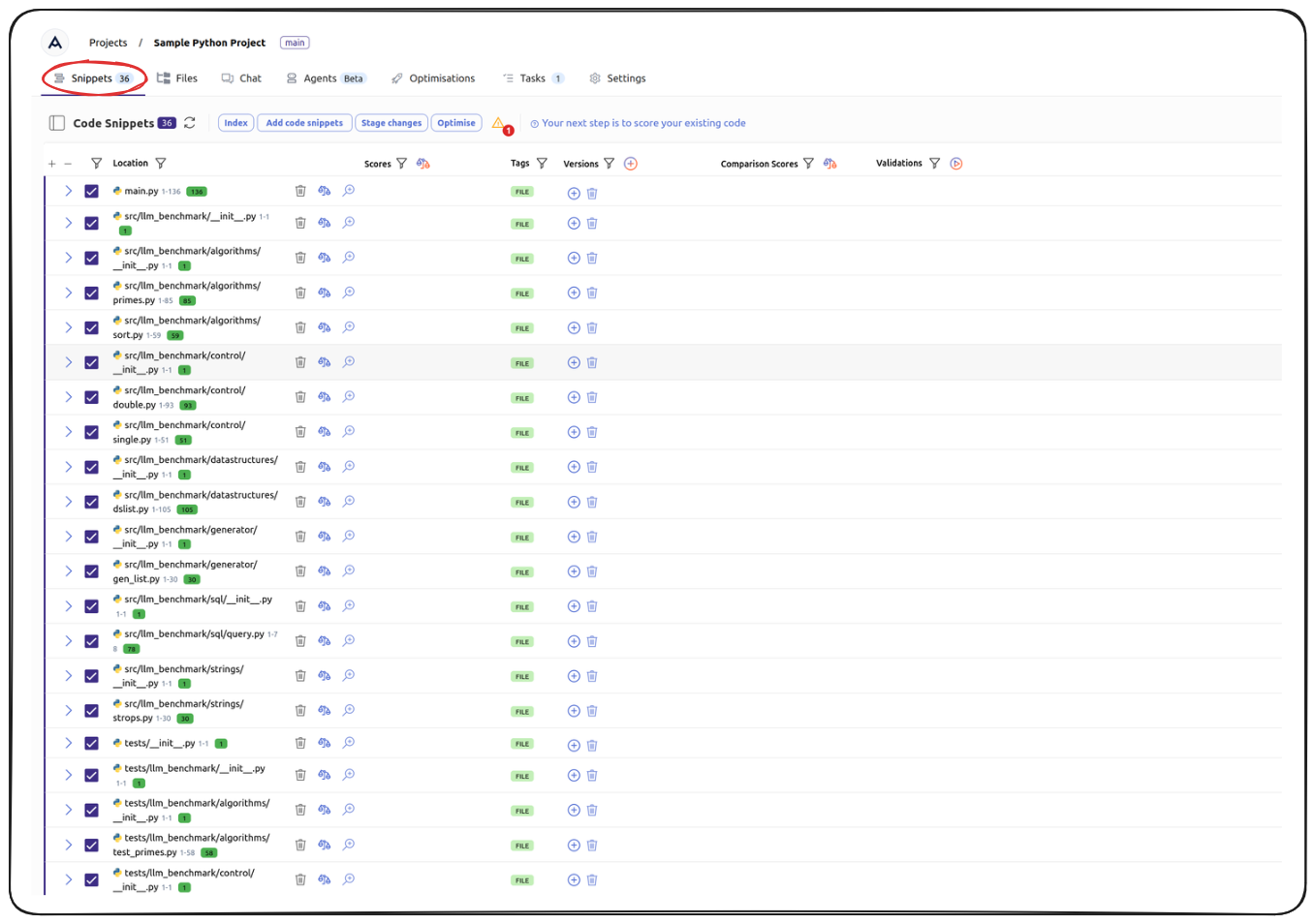

View code snippets

Upon completing the code analysis process, Artemis will extract the code snippets based on the criteria you have defined, and display them under the Snippets tab. See image below:

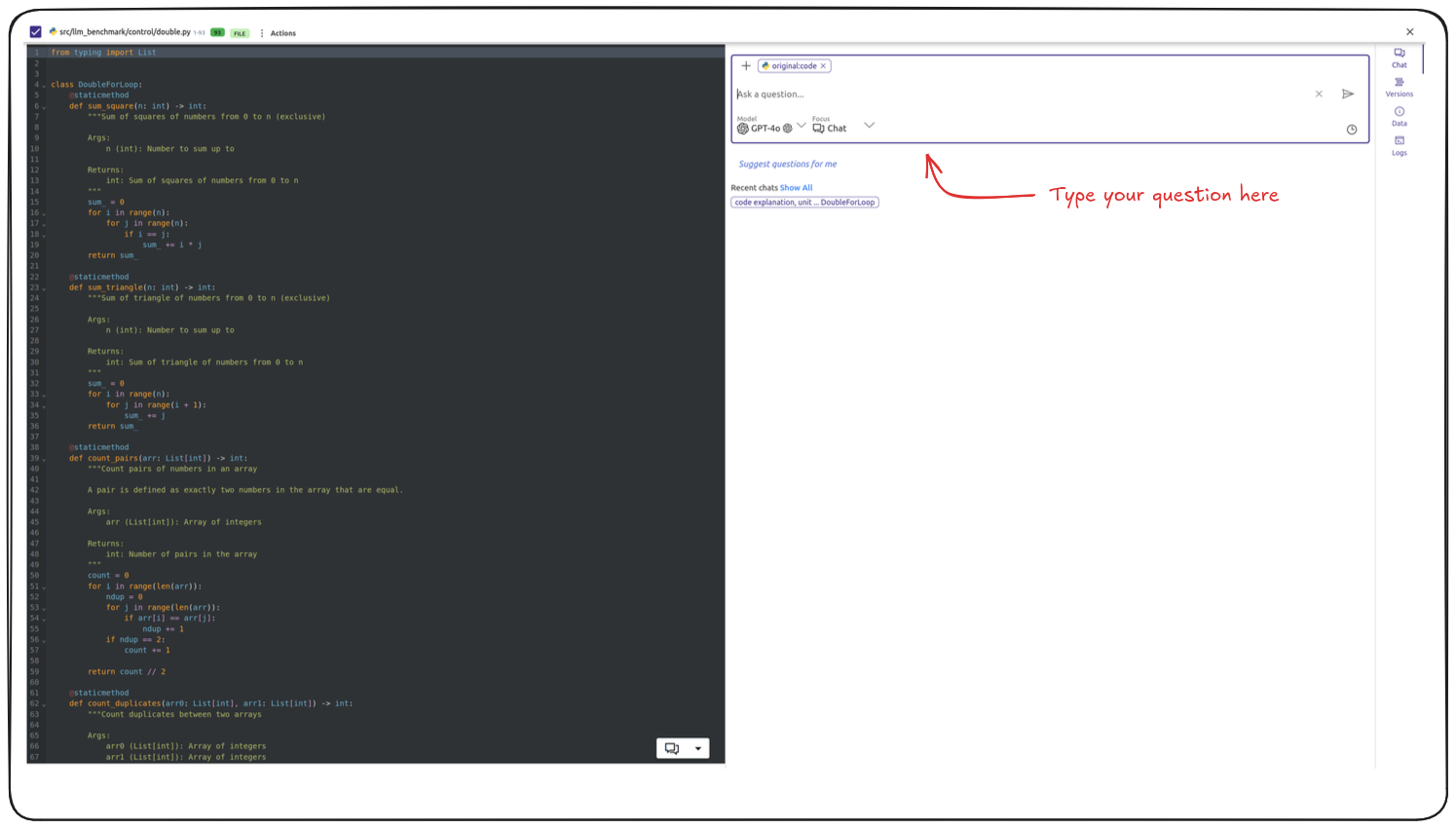

Click on any of the snippets to get details of the snippet. You can also use the chat function to ask questions about the code snippet.

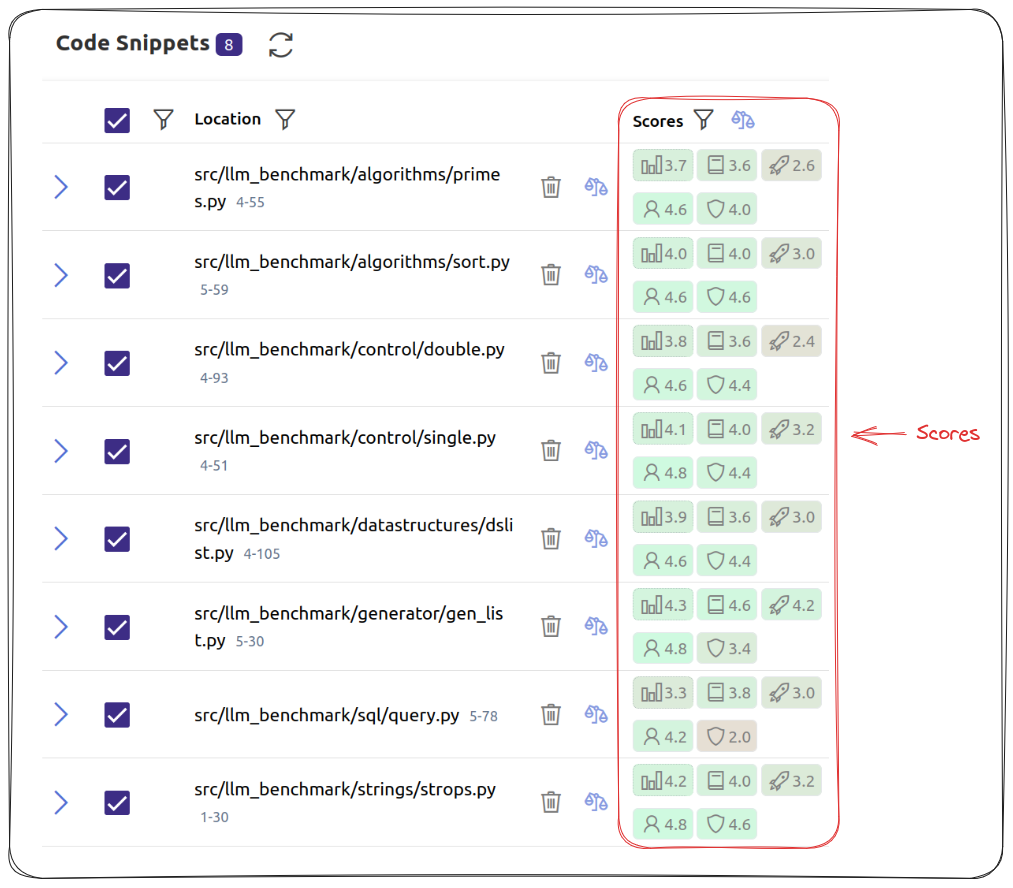

Score code snippets

Once code snippets are retrieved, you can use Artemis scorers to evaluate the quality of snippets based on pre-defined or custom criteria.

The retrieved code snippets can be scored one by one or all at once.

Across the platform, the "balance" icon represents the scoring options.

To score a selected code snippet, click the balance icon next to that snippet. To score all of the code snippets that you have retrieved, click the balance icon at the top next to the Scores column title.

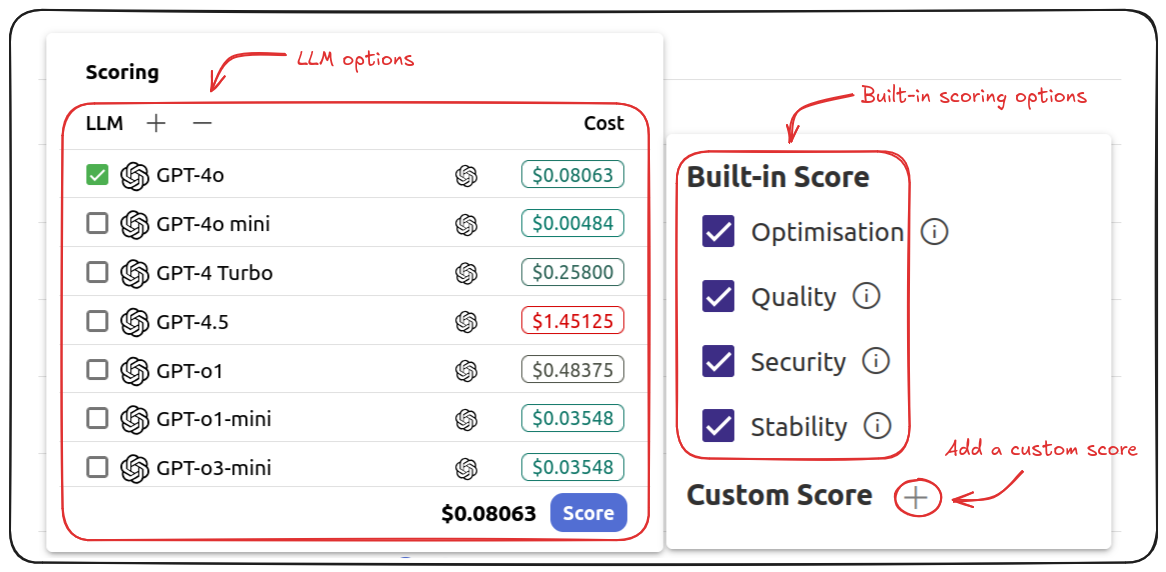

Clicking on the balance icon will give you a list of LLMs and scoring criteria. See image for details:

Use the checkboxes under Scoring to select which LLMs you would like to use to generate the scores.

Some LLMs incur a cost. Check the Estimated Cost item at the bottom of the Scoring panel before you run the scoring task, so that you know how much it will cost.

Use the checkboxes under Built-in Score to select the criteria along which you would like to score your code.

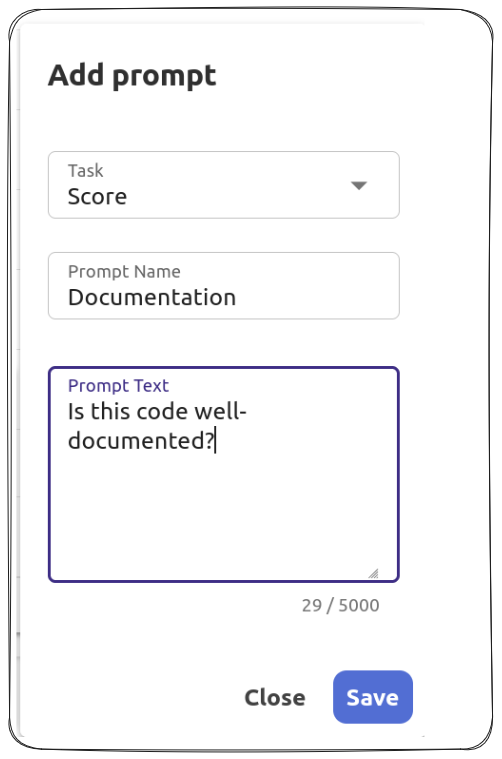

You are also able to define custom scoring metrics by clicking the Add button next to Custom Score.

Set your own Custom Score by giving the score name (for easy identification) and writing the criteria you would like to measure, using natural language prompts.

See the example below:

Once you set your prompts and select the models, click Score at the bottom of the Scoring panel to begin the scoring.

When the scoring task is complete, your scores will appear under the Scores tab. See image below:

Across your scoring tasks, if you define a custom prompt, it will appear with a "human figure outline" icon.

Understanding the scores

Artemis gives the following default scores. Here is how you can interpret them:

| Score | Explanation |
|---|---|
| Optimisation | Evaluates how optimised a piece of code is. |
| Quality | Evaluates how good a piece of code is, where good is used more broadly to uncover inefficiencies in the code. |
| Security | Evaluates how secure a piece of code is. |
| Stability | Evaluates code snippets for bugs and other failing points. |
| Average Score | This is the average of the above scores for a given piece of code. If you selected multiple LLMs to score your code, the average is also taken over the different scores provided by each LLM for each of the criteria above. |
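The Average Score calculation can be sketched as follows, assuming it is a plain arithmetic mean taken first across LLMs for each criterion and then across the criteria. The model names, the numeric scale, and the set of criteria included in the average are assumptions made for illustration, not actual Artemis output.

```python
# Hypothetical scores from two LLMs; the model names and the
# numeric scale are assumptions, not Artemis output.
scores = {
    "model_a": {"Optimisation": 6, "Quality": 8, "Security": 7, "Stability": 7},
    "model_b": {"Optimisation": 4, "Quality": 6, "Security": 9, "Stability": 5},
}


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


criteria = ["Optimisation", "Quality", "Security", "Stability"]

# Step 1: average each criterion across the selected LLMs.
per_criterion = {c: mean(s[c] for s in scores.values()) for c in criteria}

# Step 2: average the per-criterion results into one figure.
average_score = mean(per_criterion.values())

print(per_criterion)
print(average_score)
```

When every LLM scores every criterion, this gives the same result as averaging all individual scores in one pass.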

Click on the tile of each score to get an explanation of why this score was allocated to the snippet.

Low scores are an indication that your code might need to be reworked.

Using Artemis to rework suboptimal code snippets is covered in Generate code recommendations.