Evaluate code recommendations

Once you have generated alternative code recommendations for your selected code snippets, you can use Artemis to evaluate the quality of the code recommendations. Artemis offers code scoring and code validation options, which are discussed below.

Score recommended code snippets

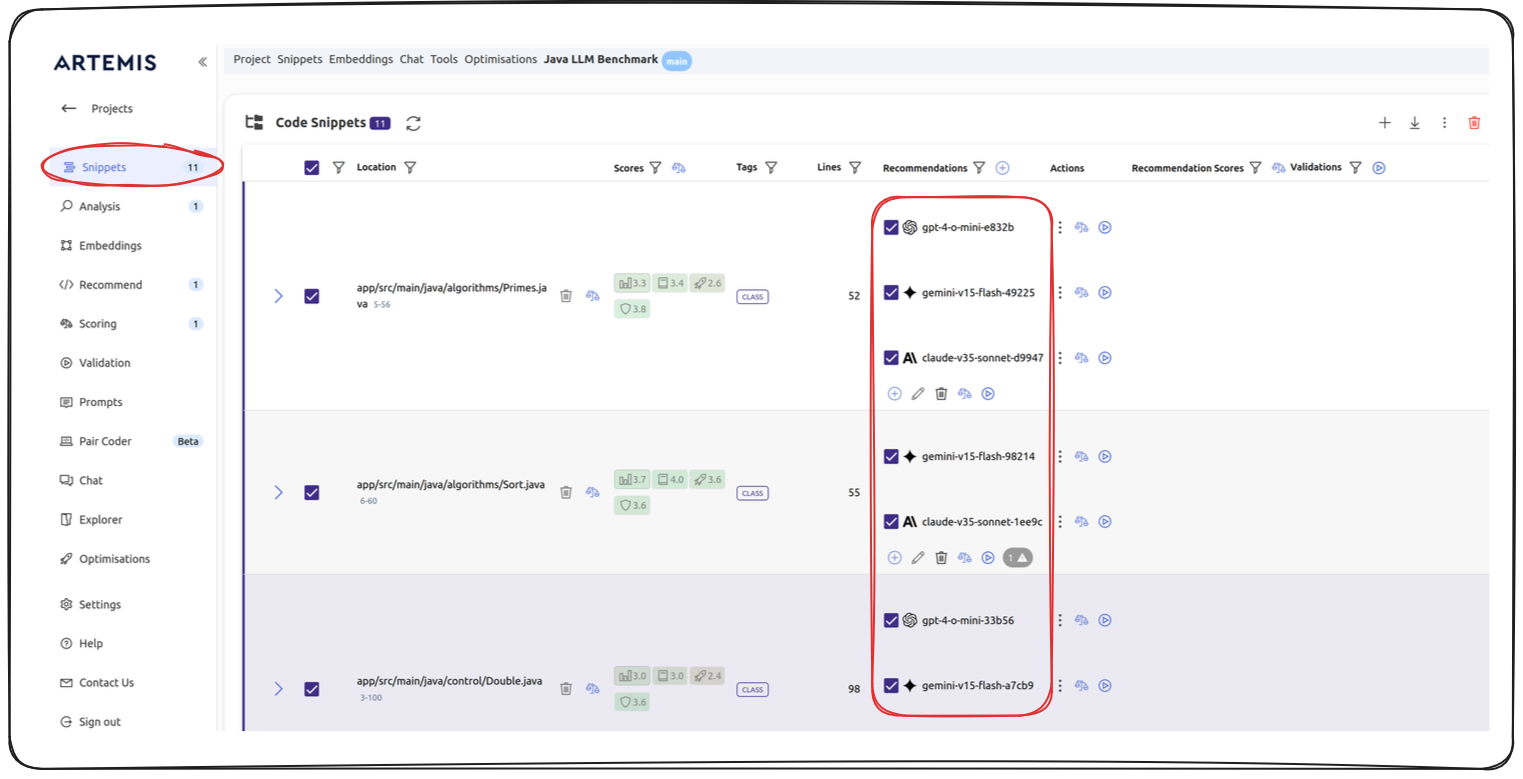

In order to evaluate recommendations, first ensure that you are on the Snippets section of your selected code project, and that you have generated at least one recommendation for your codebase. See image below:

To start the scoring, click on the "balance" icon, either next to a given code snippet, or at the top of the Recommendation Scores column.

Across the platform, the "balance" icon represents the scoring options.

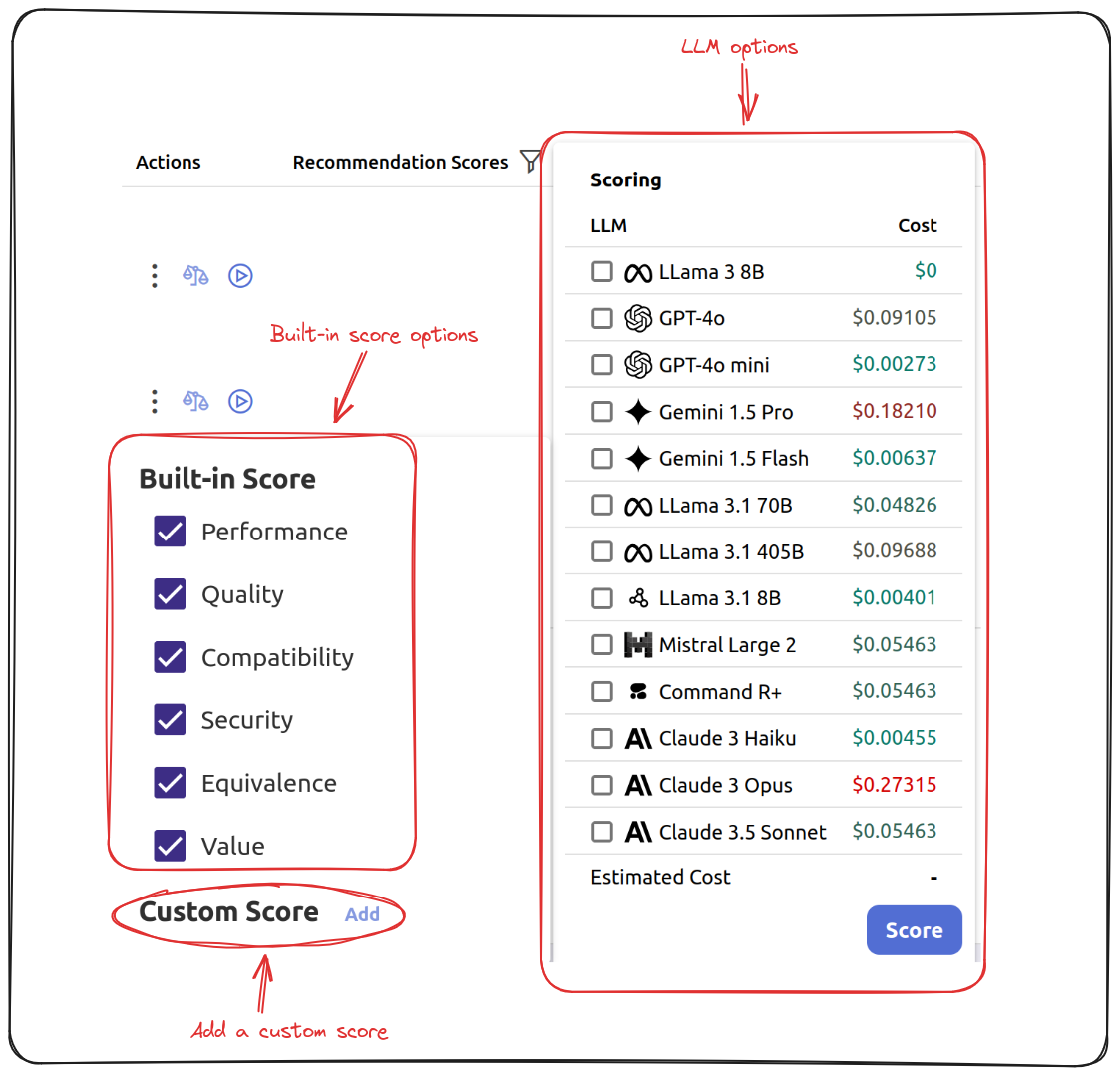

Clicking on the balance icon will give you a list of LLM and scoring criteria. See image for details:

Use the checkboxes under Scoring to select which LLMs you would like to use to generate the scores.

Some LLMs incur a cost. Check the Estimated Cost item at the bottom of the Scoring panel before you start, so that you know how much the scoring task will cost to run.

Use the checkboxes under Built-in Score to select the criteria along which you would like to score your code.

You are also able to define custom scoring metrics by clicking the Add button next to Custom Score.

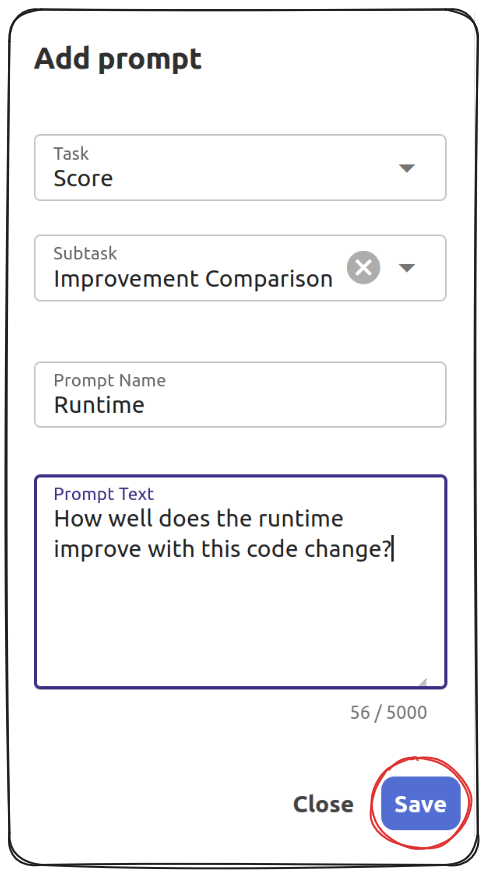

Set your own Custom Score by specifying the Task, Subtask, Prompt Name and the Prompt Text in the given spaces.

See the example below:

Once you click Save, the prompt will appear under Custom Scores.
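If you keep custom prompts under version control before entering them in the form, the four fields can be captured in a small record. The following is a minimal sketch only: the `CustomScore` class, its `missing_fields` helper, and every field value are hypothetical and are not part of Artemis.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CustomScore:
    """Hypothetical record mirroring the four fields of the Custom Score form."""

    task: str
    subtask: str
    prompt_name: str
    prompt_text: str

    def missing_fields(self) -> list[str]:
        """Return the names of fields that are empty or whitespace only."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# All values below are illustrative placeholders, not Artemis defaults.
draft = CustomScore(
    task="scoring",
    subtask="documentation",
    prompt_name="Docstring coverage",
    prompt_text="Rate how well the recommended code documents its public functions.",
)

print(draft.missing_fields())  # prints [] because every field is filled in
```

Checking for empty fields before opening the form is a convenience choice; the values still have to be entered in Artemis by hand as described above.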

Once you select the preferred models and scores, click Score under Scoring.

When the scoring task is complete, your scores will appear under the Recommendation Scores tab.

Across your scoring tasks, if you define a custom prompt, it will appear with a "human figure outline" icon.

Interpreting the scores

Artemis gives six default scores. Here is how you can interpret them:

| Score | Explanation |
|---|---|
| Performance | Measures how well the code recommendation improves the predicted performance of the piece of code. Runtime and memory usage are among the criteria considered, but they are not the only ones. |
| Quality | Measures how well the suggested code improves the code quality, readability, and maintainability of the piece of code. |
| Compatibility | Checks whether the recommended change contains backward compatibility issues. |
| Security | Checks whether the code changes are secure and avoid any security issues. |
| Equivalence | Checks whether the changes preserve the functioning of the code, and whether the general logic of the suggested piece of code is the same as the original piece of code. |
| Value | Measures how much value the suggested code snippet brings to the project. |
| Average Score | This is an average of the above six metrics. If you selected multiple LLMs for the scoring task, then the `Average Score` … |

Click on the tile of each score on Artemis to get an explanation of why this score was allocated to the snippet.

High scores are an indication that recommended code may be better than your original code snippets.
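To make the Average Score arithmetic concrete, here is a minimal sketch that averages the six built-in scores for one recommendation from a single LLM. The function name, the numeric scale, and the example values are assumptions chosen for illustration; Artemis computes the average for you, and this sketch does not cover the multiple-LLM case.

```python
# Names of the six built-in scores described in the table above.
BUILT_IN_SCORES = (
    "Performance",
    "Quality",
    "Compatibility",
    "Security",
    "Equivalence",
    "Value",
)


def average_score(scores: dict[str, float]) -> float:
    """Arithmetic mean of the six built-in scores (hypothetical helper)."""
    missing = [name for name in BUILT_IN_SCORES if name not in scores]
    if missing:
        raise ValueError(f"missing scores: {', '.join(missing)}")
    return sum(scores[name] for name in BUILT_IN_SCORES) / len(BUILT_IN_SCORES)


# Made-up values on an assumed numeric scale, for illustration only.
example = {
    "Performance": 8,
    "Quality": 7,
    "Compatibility": 9,
    "Security": 9,
    "Equivalence": 10,
    "Value": 5,
}

print(average_score(example))  # (8 + 7 + 9 + 9 + 10 + 5) / 6 = 8.0
```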

Validate recommended code snippets

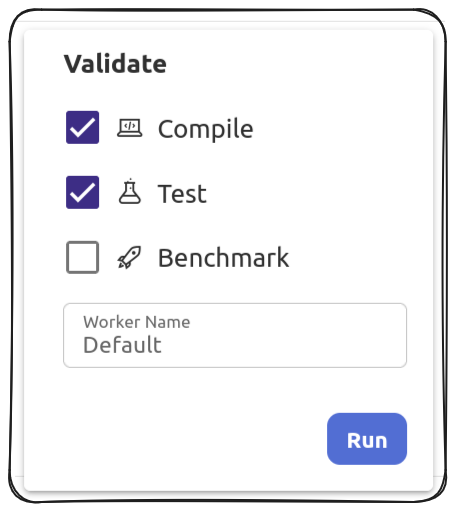

Artemis provides three options to validate the recommended code snippets: Compile checks whether the recommended code will compile, Test runs unit test to check for functionality, Benchmark measures the performance. In order to run these validations, click the "play" icon next to Validations, and click on the preferred tests. Click Run to start the validation process. See image below:

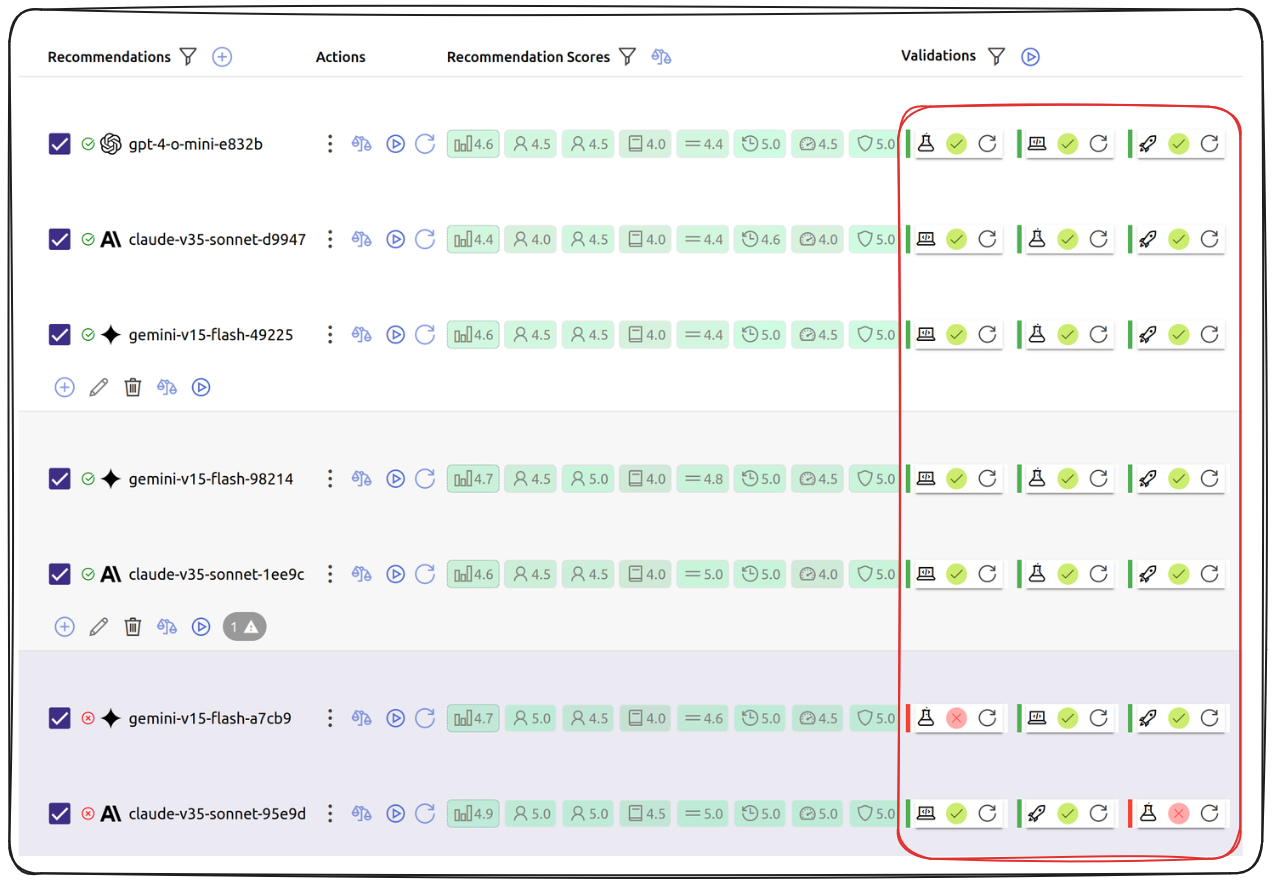

Once the validation process is complete, the status of each selected test will be displayed under Validations. See image below:

Based on code scores and validation outcomes, you can decide which of the snippets to incorporate into your codebase.
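One way to make that decision repeatable is to write the rule down. The sketch below is an assumption about how you might combine the two signals, not something Artemis does: it keeps recommendations whose selected validations all passed and whose average score meets a threshold you choose. The `Candidate` class, the `shortlist` function, the pass/fail simplification of validation statuses, and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical summary of one recommendation, copied by hand from the UI."""

    name: str
    average_score: float
    validations: dict[str, bool]  # validation name -> passed (simplified to pass/fail)


def shortlist(candidates: list[Candidate], min_score: float) -> list[Candidate]:
    """Keep candidates that passed every selected validation and meet the
    score threshold, ordered from highest to lowest average score."""
    passing = [
        c
        for c in candidates
        if all(c.validations.values()) and c.average_score >= min_score
    ]
    return sorted(passing, key=lambda c: c.average_score, reverse=True)


# Made-up data for illustration only.
candidates = [
    Candidate("rec-1", 8.0, {"Compile": True, "Test": True}),
    Candidate("rec-2", 9.0, {"Compile": True, "Test": False}),
    Candidate("rec-3", 6.5, {"Compile": True, "Test": True, "Benchmark": True}),
]

print([c.name for c in shortlist(candidates, min_score=7.0)])  # prints ['rec-1']
```

Here `rec-2` is dropped despite its higher score because a validation failed, and `rec-3` is dropped because it falls below the threshold. Treating a failed validation as disqualifying is a design choice; you may prefer to inspect such snippets manually instead.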