Building Optimisation Agents with Artemis

Author: Jingzhi Gong

How can we build an agent to optimise the performance of software with Artemis? Performance optimisation presents a unique challenge in the landscape of AI-assisted programming. Unlike adding features or fixing bugs, optimisation requires deep understanding of algorithmic complexity, data structures, and system bottlenecks. An agent must not only identify where performance problems exist but also devise creative solutions that maintain correctness while achieving measurable speedups.

The challenge becomes even more complex when we consider the vast search space of possible optimisations. Should the agent focus on algorithmic improvements? Memory efficiency? Parallelisation? Cache optimisation? Each codebase has its own unique characteristics and bottlenecks. What works brilliantly for one project might be irrelevant for another.

This is where intelligent optimisation strategies become crucial. Rather than relying on brute-force approaches or generic heuristics, we need agents that can systematically explore the problem space and evolve their strategies based on what works. An agent's approach to optimisation matters as much as its raw capabilities.

In this post we'll use the SWE-Perf benchmark to show how genetic algorithms can evolve effective optimisation strategies, demonstrating that systematic prompt engineering through Artemis Intelligence can unlock significant performance gains even with a single language model.

What is SWE-Perf?

SWE-Perf [He et al., 2024] is a benchmark that evaluates AI agents on 140 performance optimisation instances across 9 major open-source Python projects (including astropy, requests, scikit-learn, and others). Each instance presents a specific performance bottleneck with pre-defined test cases, challenging agents to generate git patches that improve runtime efficiency while maintaining correctness.

Our Approach

Agent Framework

We implemented mini-SWE-agent, a lightweight agent framework designed for autonomous coding tasks. Mini-SWE-agent is a small agent framework that uses an LLM to generate and execute shell actions to autonomously do coding tasks. It solves problems by asking the model what to do next, running that instruction (for example, reading/editing files, running tests), looking at what happened, and repeating steps. We selected this framework because it offers:

- Straightforward integration with SWE-Perf benchmark workflow

- Uses only one base LLM, avoiding expensive optimisation costs for large real-world projects

- Standardised APIs to be used with the available LLM modules implemented in our company

Base LLM

We used Claude 3.5 Sonnet as our single language model, chosen for its strong reasoning capabilities and code understanding.

Agent Optimisation Strategy

We employed zero-shot optimisation with Artemis Intelligence, where Artemis uses genetic algorithms to iteratively explore and refine agent prompt configurations. This approach allows us to:

- Automatically discover effective prompt patterns without manual tuning

- Evolve optimisation strategies through iterative improvement

- Balance cost vs performance in agent optimisation

Optimised vs Original Agent

The optimised agent incorporates several strategic improvements over the original agent:

-

Enhanced Performance Focus: The optimised agent emphasises bold, high-impact algorithmic optimisations rather than incremental tweaks, with explicit instructions to target the "single most critical performance bottleneck" and implement "substantial code changes within one function or loop."

-

Improved Optimisation Strategy: The optimised agent provides detailed guidance on algorithmic improvements (reducing time complexity, eliminating unnecessary loops), data structure upgrades (using hash tables, sets), and computation consolidation (caching, hoisting).

-

Better Constraint Handling: The optimised agent includes more sophisticated anti-gaming rules and clearer boundaries between what constitutes "surgical" file editing versus "bold" conceptual changes.

-

Streamlined Workflow: The optimised agent simplifies the submission process and provides clearer step-by-step optimisation methodology with specific performance analysis checklists.

Results

Our optimised agent delivered substantial project-level performance improvements, even when the overall benchmark averages appeared modest.

Notable Project-Level Successes

psf/requests (2 instances): Our most successful optimisation target

-

Original: 36.1% performance speedup

-

Optimised: 43.3% performance speedup

-

Artemis Intelligence Gain: +20% relative improvement

scikit-learn/scikit-learn (32 instances): Consistent improvements

-

Original: 3.5% performance speedup

-

Optimised: 4.5% performance speedup

-

Artemis Intelligence Gain: +29% relative improvement

astropy/astropy (12 instances): Steady performance gains

-

Original: 2.9% performance speedup

-

Optimised: 4.7% performance speedup

-

Artemis Intelligence Gain: +62% relative improvement

These results highlight that the optimised agent isn’t just nudging averages — it’s unlocking meaningful speedups in major, widely used projects, where even a few percentage points can have real-world impact.

Full-Scale Benchmark Results (140 Instances)

Across the entire SWE-Perf benchmark:

- Original Agent: 5.0% average performance speedup (92.1% apply rate, 87.9% correctness)

- Optimised Agent: 5.5% average performance speedup (92.1% apply rate, 87.9% correctness)

- Artemis Intelligence Gain: 10% relative improvement over the original agent

Case Study: astropy/astropy Optimisation

The astropy/astropy instance demonstrates how our optimised agent achieved substantial performance gains through comprehensive algorithmic improvements. The agent identified and optimised the report_diff_values() function in astropy/utils/diff.py, which is critical for efficient array and string comparison operations.

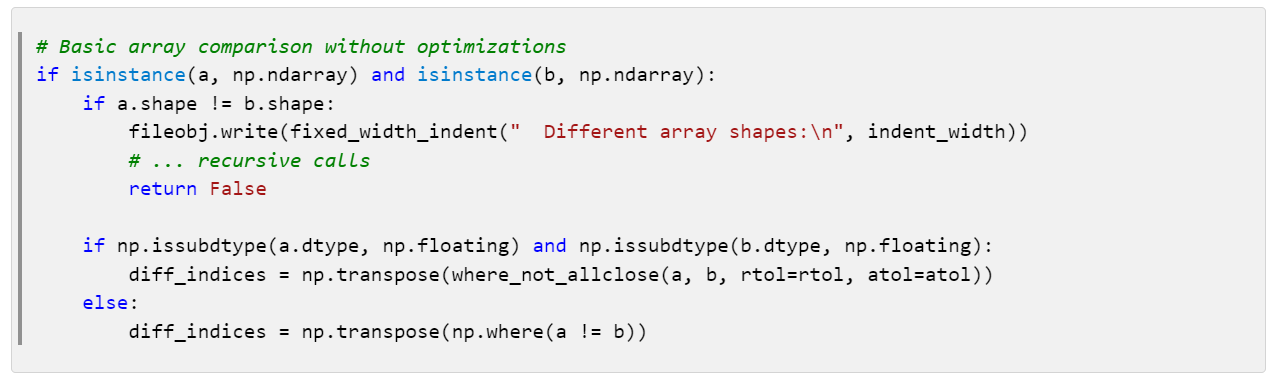

Original Code

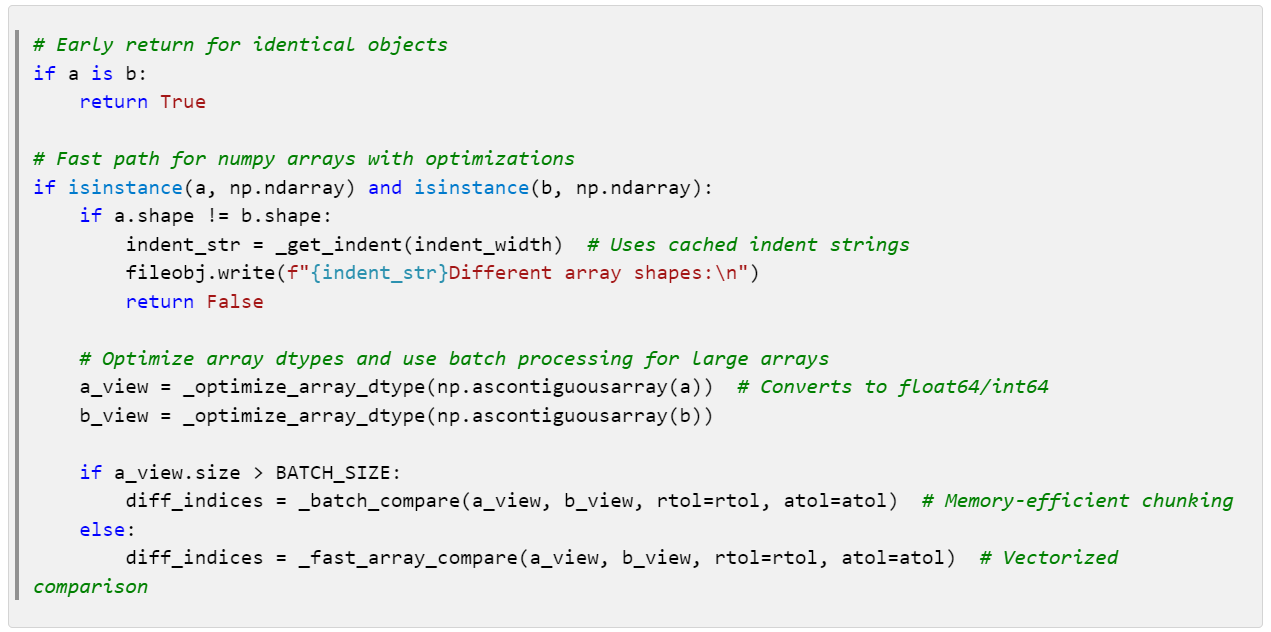

Optimised Code

Key Optimisations

-

Early identity check: Added

if a is b: return Trueto skip processing for identical objects -

Cached string operations: Replaced

fixed_width_indent()calls with cached_get_indent()function -

Optimized array dtypes: Pre-convert arrays to float64/int64 for faster NumPy operations

-

Batch processing: Process large arrays (>1M elements) in chunks to prevent memory overflow

-

Vectorized comparisons: Use specialized

_fast_array_compare()for different data types -

Memory efficiency: Use

np.ascontiguousarray()for better cache performance

Conclusion

The agent optimisation case study demonstrates how targeted, systematic approaches often outperform brute-force optimisation, especially when combined with evolutionary prompt engineering and domain-specific knowledge. By using Artemis Intelligence to evolve our agent's prompting strategy, we achieved substantial project-level gains in libraries like scikit-learn, requests, and astropy, where improvements of 20–60% relative speedup matter to real-world users.

These standout results show that smaller, focused models with optimised instructions can compete effectively with larger, more expensive alternatives in production environments [Belcak et al., 2024]. Even when overall averages appear incremental, the project-level impact reveals the true value of agentic optimisation with Artemis.

References

Belcak, P., Heinrich, G., Diao, S., Fu, Y., Dong, X., Muralidharan, S., Lin, Y. C., & Molchanov, P. (2024). "Small Language Models are the Future of Agentic AI." arXiv preprint arXiv:2406.02153.

He, X., Liu, Q., Du, M., Yan, L., Fan, Z., Huang, Y., Yuan, Z., & Ma, Z. (2024). "SWE-Perf: Can Language Models Optimize Code Performance on Real-World Repositories?" arXiv preprint arXiv:2407.12415.