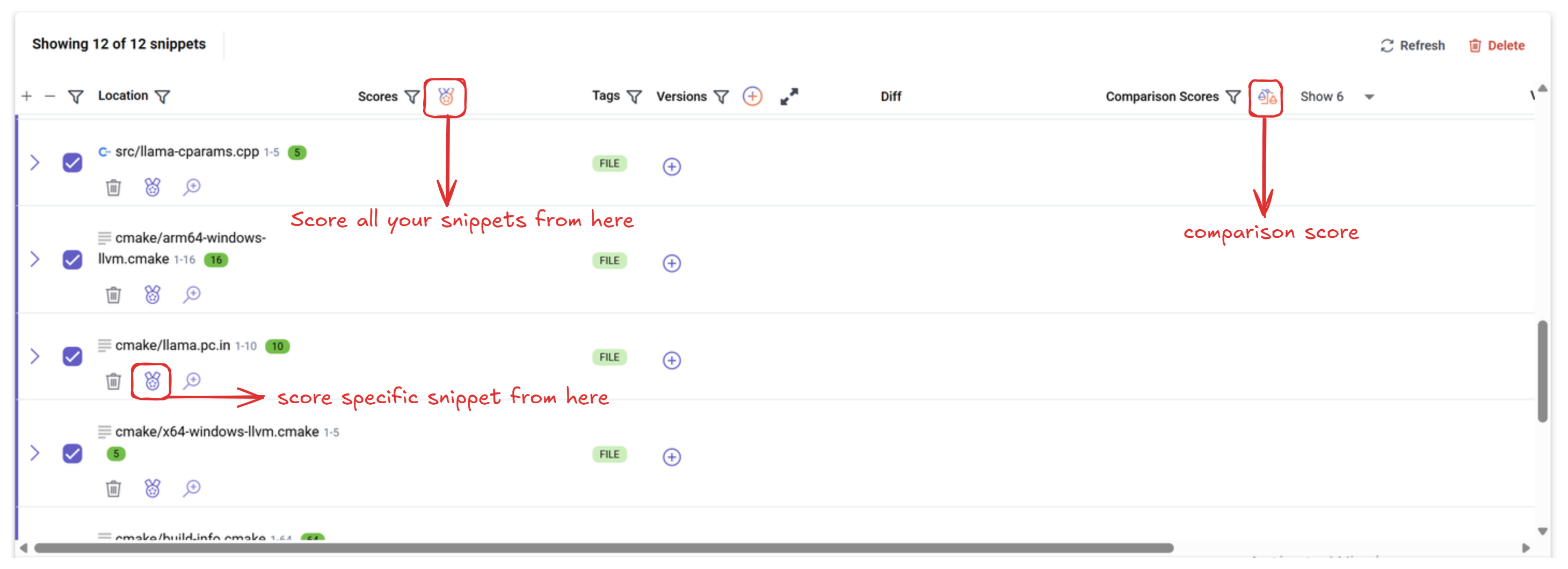

Score your targets

After analyzing your codebase and extracting targets, the next step is to score them. Scoring helps you understand which parts of your code would benefit most from optimization or improvement.

Step 1: Open scoring options

Across the platform, the balance icon represents scoring.

- Click the balance icon on any target to begin scoring.

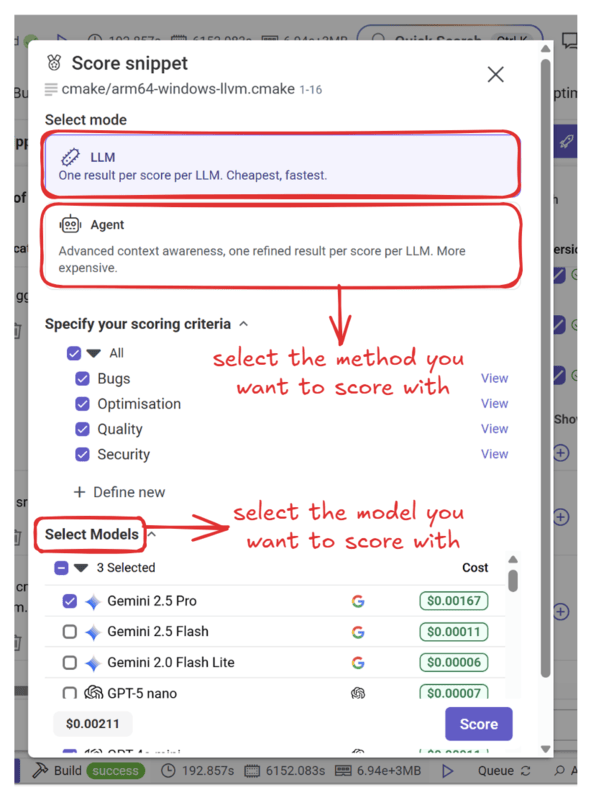

Step 2: Choose scoring method

You’ll see a list of available LLMs and criteria. Select either:

- LLM → One result per score per LLM. Cheapest and fastest.
- Agent → Adds advanced context awareness, refining results per LLM. More expensive.

Some models incur costs. Always check the Estimated Cost at the bottom of the panel before starting a scoring task.
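With the LLM method, each selected criterion is scored once by each selected model, so the number of results grows multiplicatively as you add criteria or models. The minimal sketch below only illustrates that counting rule. It is not Artemis code, the model names are hypothetical, and it does not model how the Agent method or the Estimated Cost is computed.

```python
from itertools import product


def expected_results(criteria: list[str], models: list[str]) -> list[tuple[str, str]]:
    """List one (criterion, model) pair per result expected from the LLM method."""
    return list(product(criteria, models))


criteria = ["Optimisation", "Quality", "Security", "Stability"]
models = ["model-a", "model-b"]  # hypothetical model names, for illustration only

pairs = expected_results(criteria, models)
print(len(pairs))  # 4 criteria x 2 models = 8 results
```

Choosing fewer criteria or models is therefore the most direct way to reduce the amount of scoring work per target.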

Step 3: Define criteria

You can either:

-

Use built-in metrics (e.g. Optimisation, Quality, Security, Stability).

-

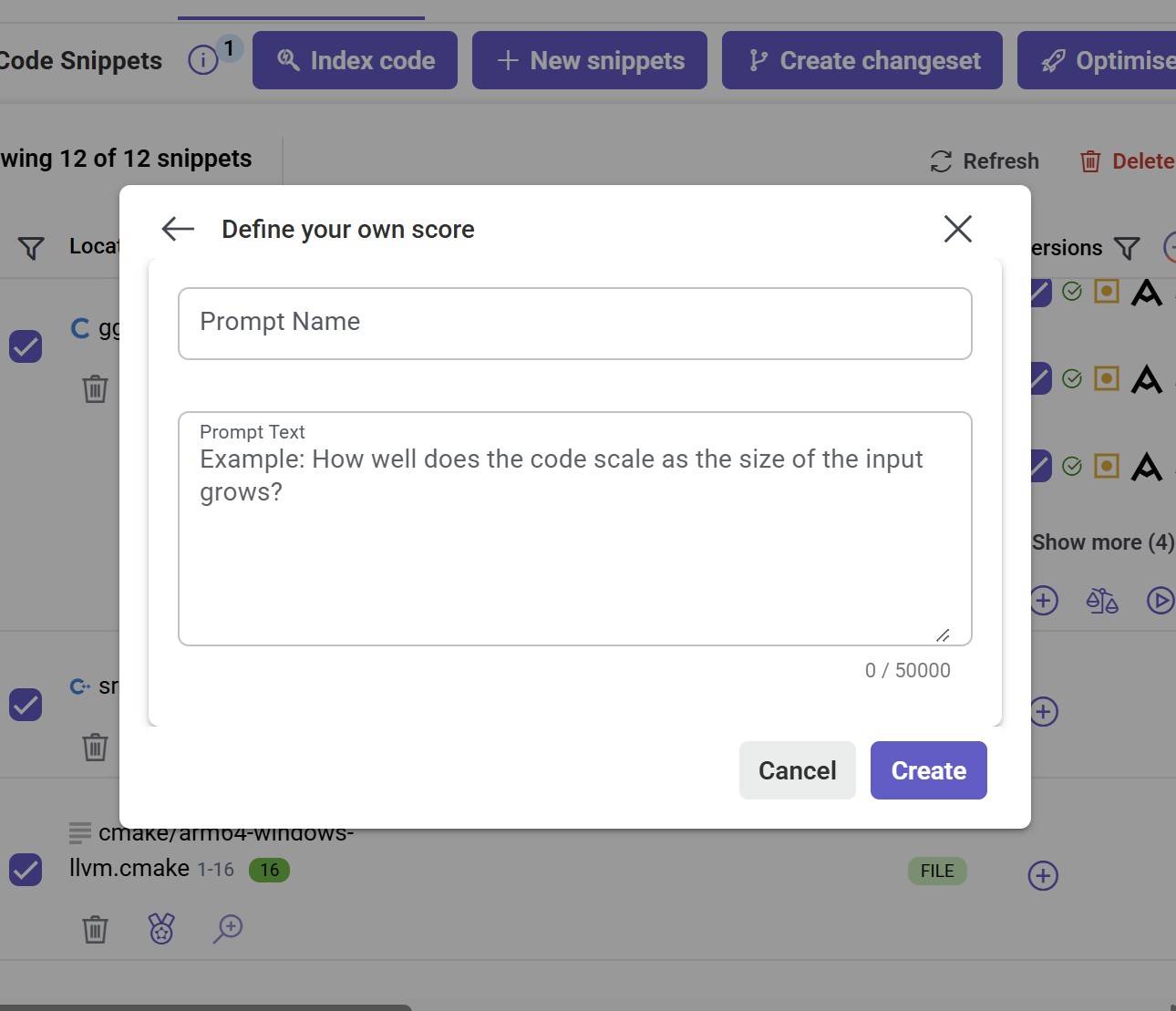

Define your own:

- Click

Define new. - Provide a name.

- Write your criteria in natural language prompts.

- Click

Custom prompts are marked with a human figure outline icon.

Step 4: Choose model(s)

Select one or more models to apply.

Step 5: Run the scoring

- Click Score to start the evaluation.
- When complete, results appear under the Scores tab.

Understanding the results

Artemis provides several default scores:

| Score | Meaning |
|---|---|
| Optimisation | How optimized the code is. |
| Quality | Broader measure of code health and inefficiencies. |
| Security | Checks for security issues. |
| Stability | Identifies potential bugs or failure points. |
| Average Score | Average across criteria (and across LLMs if multiple were used). |
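To make the Average Score concrete, here is a minimal sketch that takes the unweighted mean of every (model, criterion) score. It assumes that all scores share a common numeric scale and that every model scored every criterion; in that case this equals averaging each model's mean. The model names and values are made up for illustration, and Artemis's exact averaging or weighting is not documented here.

```python
from statistics import mean

# Hypothetical results: {model_name: {criterion: score}}. Illustrative values only.
scores = {
    "model-a": {"Optimisation": 6.0, "Quality": 7.0, "Security": 8.0, "Stability": 7.0},
    "model-b": {"Optimisation": 5.0, "Quality": 8.0, "Security": 9.0, "Stability": 6.0},
}


def average_score(scores: dict[str, dict[str, float]]) -> float:
    """Unweighted mean over every (model, criterion) score."""
    values = [s for per_model in scores.values() for s in per_model.values()]
    if not values:
        raise ValueError("no scores to average")
    return mean(values)


def per_criterion_average(scores: dict[str, dict[str, float]], criterion: str) -> float:
    """Mean of one criterion across the models that scored it."""
    return mean(per_model[criterion] for per_model in scores.values() if criterion in per_model)


print(average_score(scores))                          # 7.0
print(per_criterion_average(scores, "Optimisation"))  # 5.5
```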

Click any score tile to see why that score was assigned.

Low scores indicate areas worth reworking. Once you have identified them, move on to Generate code versions to apply improvements.
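If you export or note down each target's average score, a simple way to shortlist candidates for rework is to keep those below a threshold and sort them from lowest to highest. The sketch below assumes hypothetical target names, scores, and a threshold of 6.0. These values are illustrative choices, not Artemis defaults.

```python
# Hypothetical targets and their average scores (illustrative values, not Artemis output).
targets = {
    "parse_config": 4.5,
    "load_data": 7.8,
    "render_report": 5.9,
    "cache_lookup": 8.6,
}


def targets_to_rework(averages: dict[str, float], threshold: float) -> list[str]:
    """Return targets scoring below `threshold`, lowest score first."""
    low = [(score, name) for name, score in averages.items() if score < threshold]
    return [name for _, name in sorted(low)]


print(targets_to_rework(targets, threshold=6.0))  # ['parse_config', 'render_report']
```

Sorting lowest first puts the weakest targets at the top, so they are the first ones you would send to Generate code versions.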